Watch on YouTube, listen on Apple Podcast, Spotify, or add our RSS feed to your favorite player!

When Chris Lattner first came on the podcast, we titled the episode “Doing it The Hard Way”. Rather than simply building an inference platform and posting charts showing GPUs going brrr, they went down to the compiler level and started rebuilding the whole stack from scratch.

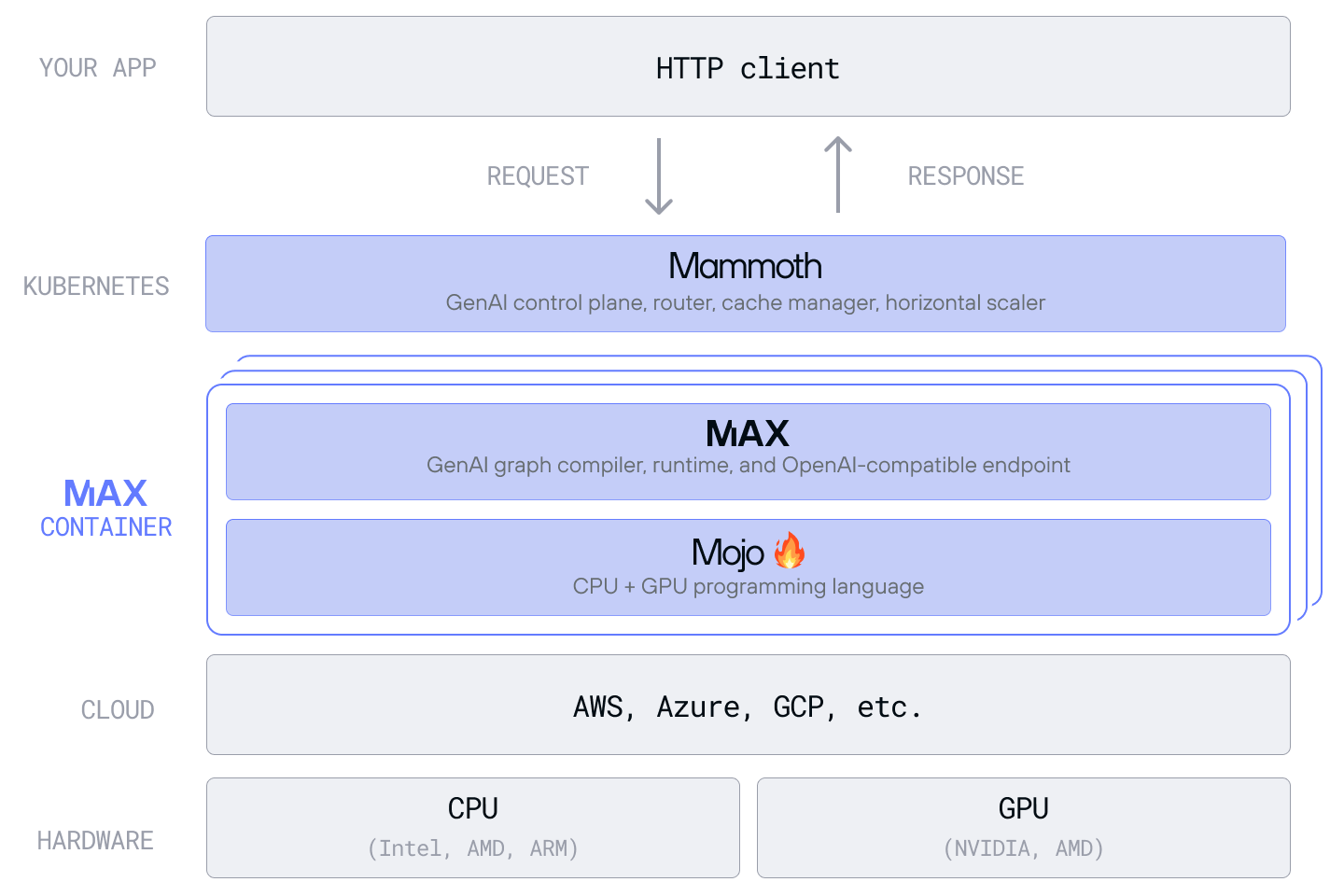

Modular is being built as “concentric circles”, as Chris calls them. You drop into the layer you need; everything lower is optional plumbing you never touch. We covered a lot of the Mojo design in the previous episode, so refer to that if you need a refresher. At the core of its uniqueness, there’s one thing: Mojo exposes every accelerator instruction (tensor cores, TMAs, etc.) in a Python‑familiar syntax so you can:

- Write once, re‑target to H100, MI300, or future Blackwell.

- Run 10‑100× faster than CPython; usually beats Rust on the same algo.

- Works as a zero‑binding extension language:

import my_fast_fn.mojoand call from normal Python.

This removes the need for any Triton/CUDA kernels and C++ “glue” code.

The same approach has been applied to the MAX inference platform:

- Max’s base image is ≈ 1 GB because it strips the Python dispatch path.

- Supports ~500 models with pre-built containers which include all the optimizations needed like Flash‑Attention, paged KV, DeepSeek style MLA, etc.

- Most importantly they ship nightly builds to really get the open source community involved

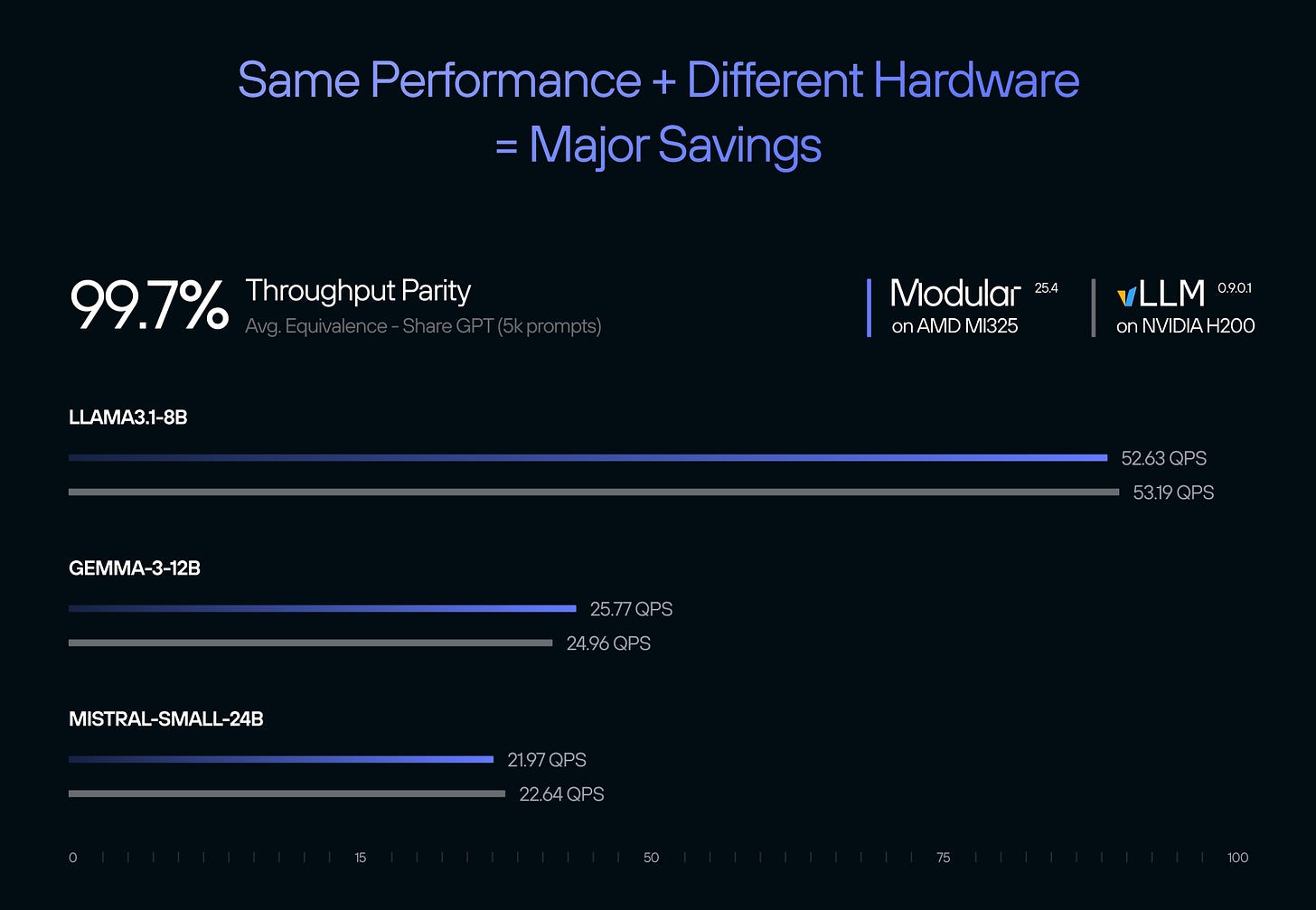

This week, they had a big announcement with AMD showing that they are ~matching MI325 performance with H200s running on vLLM on some of the more popular open source models. This is a huge step forward in breaking up NVIDIA’s dominance (you can also listen to our geohot episode for more on AMD’s challenges).

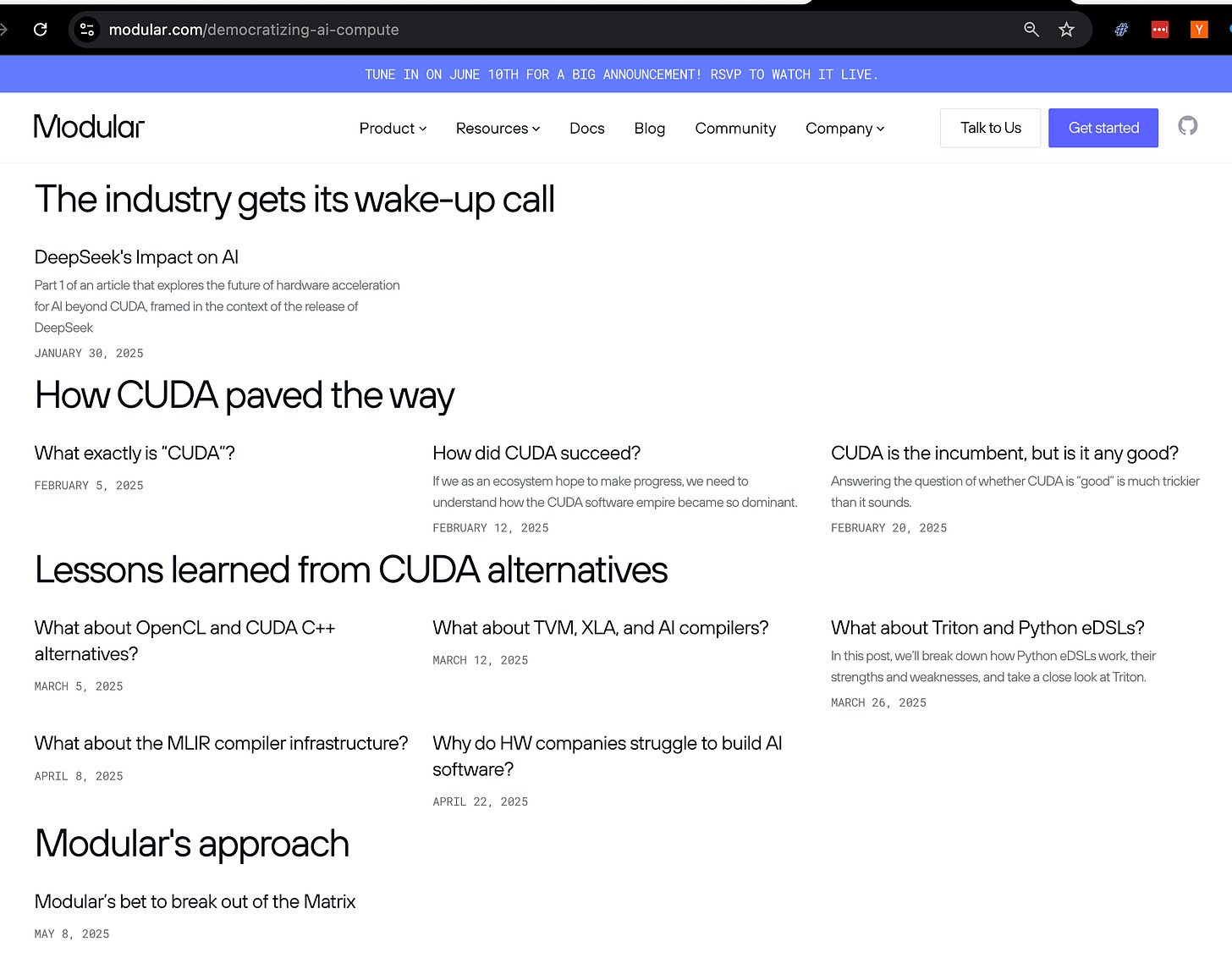

Chris has also been on a blogging bonanza with his Democratizing AI Compute series, at once a masterclass in the problems CUDA solves, but also the most coherent pitch for Modular’s approach: https://www.modular.com/democratizing-ai-compute

Chris already replaced GCC with LLVM and Objective‑C with Swift; is Mojo replacing CUDA next? We talked through what it’s needed to make that happen in the full episode. Enjoy!

Show Notes

Timestamps

- [00:00:00] Introductions

- [00:00:12] Overview of Modular and the Shape of Compute

- [00:02:27] Modular’s R&D Phase

- [00:06:55] From CPU Optimization to GPU Support

- [00:11:14] MAX: Modular’s Inference Framework

- [00:12:52] Mojo Programming Language

- [00:18:25] MAX Architecture: From Mojo to Cluster-Scale Inference

- [00:29:16] Open Source Contributions and Community Involvement

- [00:32:25] Modular's Differentiation from VLLM and SGLang

- [00:41:37] Modular’s Business Model and Monetization Strategy

- [00:53:17] DeepSeek’s Impact and Low-Level GPU Programming

- [01:00:00] Inference Time Compute and Reasoning Models

- [01:02:31] Personal Reflections on Leading Modular

- [01:08:27] Daily Routine and Time Management as a Founder

- [01:13:24] Using AI Coding Tools and Staying Current with Research

- [01:14:47] Personal Projects and Work-Life Balance

- [01:17:05] Hiring, Open Source, and Community Engagement

Transcript

Alessio [00:00:05]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel, and I'm joined by my co-host Swyx, founder of SmolAI.

Overview of Modular and the Shape of Compute

Swyx [00:00:12]: And we're so excited to be back in the studio with Chris Lattner from Mojo / Modular. Welcome back.

Chris: Yeah, I'm super excited to be here. As you know, I'm a huge fan of both of you and also the podcast and a lot of what's going on in the industry. So thank you for having me.

Swyx: Thanks for keeping us involved in your journey. And I think like you spend a lot of time writing when obviously your time is super valuable. Educating the rest of the world. I just saw your like a two and a half hour workshop with the GPU mode guys. And that was super exciting. We're decked out in your swag. We'll get to the part where you are a personal human machine of just productivity and you do so much. And, you know, I think there's a lot to learn. And for people just on a personal level. But I think a lot of people are going to be here just for the state of modular. We're also calling it the shape of compute. I think it's going to be probably the podcast title.

Chris: Yeah, it's super exciting. I mean, there's so much going on in the industry with hardware and software and just innovation everywhere. Most people can catch up on like the first episode that we did and we introduced modular. I think people want to know, like, I think since then you sort of open sourced it. There's been a lot of updates. What would you highlight as like the past year or so of updates? Yeah. So if you zoom out and say, what is modular? Yeah. So we're a company that's just over three years old. Three and a quarter. So we're quite a ways in. The first three years was a very mysterious time to a lot of people because I didn't want people to really use our stuff. And so why that? Why do you build something that you don't want people to use? Well, it's because we're figuring it all out. And so the way I explain is we were very much in a research phase. And so we were trying to solve this really hard problem of how do we unlock heterogeneous compute? How do we enable GP programming to be way easier? How do we enable innovation that's full stack across the AI stack by making it way simpler and driving out complexity? Like there are these core questions. And I had a lot of hypotheses, right? But building an alternate AI stack that is not as good as the existing one isn't very useful because people will always evaluate it against state of the art. And at that point, you say, okay, well, let's just break it down like a normal engineering problem. It's not R&D anymore. It's an engineering problem. You say, okay, cool. Let's refactor these APIs. Let's deprecate this thing. Let's, oh yeah, let's add H100 support. Let's add function calling and token sampling and like all the different things you need. You can project manage that, right? And so every six weeks, we've been shipping a new release. And so we added all the function calling features. And now you have agentic workflows. We have 500 models. We have H100 support. We're about to launch our AMD MI300 and 325 support. That'll be a big deal for the industry. And as you do that in Blackwell, like all this stuff is like all now in the product. And so as this happens now, suddenly it's like, oh, okay, I get it. But this is a very fundamentally different phase for us because it works, right? And once it works, you can see it end to end. Lots of people can put the pieces together in their brain. It's not just in my brain. And a few other people that understand how all the different individual pieces work. Or now everybody can see it. So as we face shift now, suddenly it's like, yeah, okay, let's open source it. Well, now we want more people involved. Now let's do each of these things. It's actually, we had a hackathon. And so we invited 100 people to come visit and spend the day with us. And we learned GPU programming from scratch. And so, you know, we built a very fancy inference framer. You know, the winning team for the hackathon took that four person team. And one day they had not used Mojo before. They hadn't programmed GPUs before. And they had built a training. They wrote an Adam Optimizer, a bunch of training kernels, they built a simple backprop system and they actually showed that you could train a model using all the stuff we'd built for inference because it's so hackable and also because the AI coding tools are awesome, but, but this is the power of what you can do when, when you're ready to scale. Now, if we had done that six months ago or 12 months ago or something, it would have been a huge mess, right? Because everything would break and there are a lot of bugs and, you know, honestly, today it's still an early state system. There's still some bugs, but now it's useful. It can solve real world problems. And so that's, that's the difference. And that's kind of the evolution that we've gone through as a team.

From CPU Optimization to GPU Support

Alessio [00:06:55]: I remember when we first had you at the start, you were focused on CPU actually. How long did you work on CPU and then how much of a jump was it to go from CPU to GPU?

Chris [00:07:06]: So, so the way I would, the way I explain modular is if you take that first three year R&D journey, and if you round a little bit, first year was, um, prove compilation philosophy. And so this was writing. Very. Very abstract compiler stuff and then prove that we can make a matrix multiplication go faster than Intel MKL's matrix multiplication on Intel silicon and make it configurable and multiple D types and prove like a very narrow problem and do that by writing this MLIR compiler representation directly by hand, which was really horrible. But we proved the technology and some technology milestone year two was then say, okay, cool. I believe the, the fundamental approach can work, but guess what? Usability is terrible. Writing internal compiler stuff by hand sucks. And also Matt Moles a long ways from an AI framework. And so year two embarked on two paths. One is Mojo. So program language syntax, member of the Python family, make it much more accessible and easy to write kernels and performance and all that kind of stuff. And then second, build an AI framework for CPUs, as you say, where you could go beat OpenVINO and things like this on Intel CPUs. End of year two, we, we got in, we're like, foof, we've achieved this amazing thing, but you know, it's cool. GPUs. And so again, two things we said, okay, well, let's prove we could do GPUs. Number one, also let's not just build a, you know, air quotes, a CUDA replacement. Let's actually show that we could do something useful. Let's take on LLM serving. No big deal. Right. And so again, two things where you say, let's go prove that we can do a thing, but then validate it against a really hard benchmark. And then that was, that's what, what brought us to year three. Yep. So in each of these stages is really hard and lots of interesting technical problems. Um, probably the biggest problem that you face. So you face people that are constantly telling you it's impossible, but again, you just have to be a little bit stubborn and believe in yourself and work hard and stay focused on milestones. When they say impossible, do they mean impossible or very, very hard? Well, so, I mean, it's, it's common sense that CUDA is nearly 20 years old and VIA's got hundreds or thousands of people working on it. The entire world's been writing CUDA code for many years, very, very hard. And so it's, no, I mean, many people think it's impossible for a startup to do anything in the space. Yeah. Like that's just common sense. I, all these people have thrown all this money at all. All these different systems, they've all failed. Why is your thing going to succeed when all these other things built by other smart people have failed? Right. And so it's conventional wisdom that change is impossible, but Hey, we're an AI, you know, this like changes all around us all the time. Right. And so what you need to do is you need to map out what are the success criteria, what causes change to actually work. And across my career, like with LLVM, all the GCC people told me it was impossible. Like LLVM will fail because GCC is 20 years old and it's had hundreds of people working on it and blah, blah, blah. And spec benchmarks. And whatever, nobody told me it was impossible outside because it was secret. So that was a little bit different, but everybody inside Apple that knew about it said, no, no, no objective C is fine. Like we should just improve objective C. The world doesn't need new programming languages that new programming languages never get adopted. Yeah. Right. And, and it's common sense that like new programming languages don't go anywhere. That's conventional wisdom. You know, MLIR super funny. MLIR is another compiler thing. And so built this thing, brought it to the LLVM community and said, Hey, we open sources. Does LLVM work? I want to it. I know a few LLVM people, right? It was my PhD project and all the LLVM Illuminati in the community had been working on LLVM for 15 years or something. They're like, no, no, LLVM is good enough. We don't need a new thing. Machine learning is not that important. And so again, obviously have developed a few skills to work through this kind of challenge, but you get back to the reality that humans don't like change. And when you do have change, it takes time to diffuse into the ecosystem. For people to process it. And this is where we talk about hackathon. Well, you kind of have to teach people about new things. And so this is why it's like really important to take time to do that. And the blog post series and things like this are all kind of part of this like education campaign, because none of this stuff is actually impossible. It's just really hard work and requires an elite team and good vision and guidance and stuff like this. But but it's understandably conventional wisdom that it's impossible to do this.

MAX: Modular’s Inference Framework

Alessio [00:11:14]: And it's the idea to build serving basically. I don't need to tell you how much better this is. I can just actually serve the models using our platform and then you can see how much faster it is. And then obviously you'll adopt it. Yeah.

Chris [00:11:27]: Well, so today you can download MAX, it's available for free. You can scale it to thousands of GPUs you want for free. I'll tell you some cool things about it. So it's not as good as something like VLLM because it's missing some features and it only supports NVIDIA and AMD hardware, for example. But by the way, the container is like a gigabyte. Wow. Right. Well, why is it a gigabyte? Well, it's a completely new stack. It doesn't need. You can use CUDA, you can run arbitrary PyTorch models. And if that's the case, then OK, you pull in some dependencies. But like if you're running the common LLMs and the Gen AI models that people really care about. Well, guess what? It's a completely native stack. It's super efficient. Doesn't have Python in the loop. And for eager mode op dispatch and stuff like this, because you don't have all that dependency, guess what? Your server starts up really fast. So if you care about horizontal auto scaling, that's actually pretty cool. If you care about reliability, it's pretty cool. They don't have all these weird things that are stacked in there. If you're wanting to do something slightly custom, guess what? You have full control over everything and stuff's all open source. And so you can go hack it. This thing's more open source than VLM because VLM depends on all these crazy binary CUDA kernels and stuff like this that are just opaque blobs from NVIDIA. Right. And so this is a very different world. And so I don't want everybody just like overnight drop VLM because I think it's a great project. Right. But but I think there's some interesting things here and there's specific reasons that it's interesting to certain people.

Alessio [00:12:47]: Can you just maybe run people through the pieces of MAX because I think last time none of this existed.

Mojo Programming Language

Chris [00:12:52]: Yeah, exactly. Last time has been a long time ago, both in AI and also in modular time. Yeah. So the bottom of our stack is we think about it as concentric circles. So the inside is a programming programming language called Mojo. Chris, why did you have to build another programming language? Well, the answer is that none of the existing ones actually solve the problem. So what's the problem? Well, the problem is that all of compute is now accelerated. Yeah. You have GPUs, you have TPUs, you have all these chips, you have CPUs also. And so we need a programming language that can scale across this. And so if you go shopping and you look at that, like the best thing ish is C++. Like there are things like OpenCL and SYCL and there's like a million things coming out of the HPC community that have tried to scale across different kinds of hardware. Let me be the first to tell you, and I can say this now, I feel I feel comfortable saying this, that C++ sucks. I've earned that right. Having written so much. And so and let me also claim AI people generally don't love C++. What do they love? They love Python. Right. And so what we decided to do is say, OK, well, even and even within the space of C++, there isn't an actually good unifying thing that can talk to tensor cores and things like this. And so we said, OK, I want again, Chris being unreasonable, I want something that can expose the full power of the hardware, not just one chip coming from one vendor, but the full power of any chip. It has to support the next level of very fancy compilers and other stuff like this with graph compilers and stuff like that needs to be portable. So portable across vendors and enable portable code. So, yeah, it turns out that an H100 and AMD chip are actually quite different. That is true. But there's a lot that you can reuse across that. And so the more common code that you can have, the better. The other piece is usability. And so we want something people can actually use and actually learn. And so it's not good enough. Yeah. Just to have Python syntax. You want to have performance and control and again, full power of the hardware. And so this is where Mojo came from. And so today, Mojo is really useful for two things. And so Mojo will grow over time. But today I recommend you use it for places you care about performance. So something running on GPU, for example, or really high performance, doing continuous batching within a web server or something like this where you have to do fancy hashing. And like if you care about performance, Mojo is a good thing. Other cool thing that we're about to talk about. So stay tuned is it's the best way to extend Python. And so if you have a big blob of Python code, you care about performance, you want to get the performance part out of Python. You can make it. We make it super easy to move that to Mojo. Mojo is not just a little faster than Python. It's faster than Rust. So it's like tens of thousands of times faster than Python. And it's in the Python family. And so you can literally just like rip out some for loops, put in Mojo, and now you get performance wins. And then you can start improving the code in place. You can offload it to GPU. You can do this stuff. And all the packaging is super simple. And it's just a beautiful way to make Python code go fast.

Swyx: Just to double-click, you said you were about to ship this?

Chris: It's technically in our nightlies, but we haven't actually announced it yet. Isn't it? Okay. We do a lot of that, by the way, because we're very developer-centric. And so if you join our Discord or Discourse. Nightlies is great. It's like the best program. Yeah. And so we have a ton of stuff that's kind of unannounced but well-known in the community. Okay. I thought this was already released. Yeah. Yeah.

Alessio [00:16:12]: And when you say rip out, is this, you have a Python binding to then run the Mojo? Like, you have C bindings or how does that work?

Chris [00:16:19]: This is the cool thing, is it's binding-free. So think about, like, so today, again, sorry, I get excited about this. I forget how tragic the world is outside of our walls. The thing we're competing with is if you have a big blob of Python code, which a lot of us do, you build it, build it, build it, build it, performance becomes a problem. Okay. What do you do? Well, you have a couple of different things. You can say, I'm going to rewrite my entire application in Rust or something, right? Some people do that. The other thing you can do is you can say, okay, I'm going to use Pybind or Nanobind or some Rust thingy and rewrite a part of my module, the performance-critical part. And now I have to have this binding logic and this build system goop and all this complexity around I have Rust code over here and I have Python code over here. Oh, by the way, you now have to hire people who can work on Rust and Python and like that. Right. So this fragments your team. It's very difficult to hire Rust people. I love them, but there's just too few of them. And so what we're doing is we're saying, okay, well, let's keep the language that's basically the same. So Mojo doesn't have all the features that Python does. Notably, Mojo doesn't have classes. But it has functions. Yeah, you can use arbitrary Python objects. You can get this binding-free experience and it's very similar. And so you can look at this as being like a super fast, slightly more limited Python. And now it's cohesive within the ecosystem. Yeah. And so I think this is useful way beyond just the AI ecosystem. I think this is a pretty cool thing for Python in general. But now you bring in GPUs and you say like, okay, well, I can take your code and you can make it real fast. Because CPUs have lots of fancy features like their own tensor cores and SIMD and all this stuff. Then you can put it on a GPU. And then you can put it on eight GPUs. And so you can keep going. And this is something that Rust can't do. Right. You can't put Rust on a GPU, really. Right. And things like this. And this is part of this new wave of technology that we're bringing into the world. And also, I'm sorry, I get excited about the stuff we've shipped and what we're about to ship. But this fall is going to be even more fun. Okay. So stay tuned. There may be more hardware beyond AMD and NVIDIA. Nice.

MAX Architecture: From Mojo to Cluster-Scale Inference

Swyx [00:18:25]: So that was Mojo. Yep. The first concentric circle. Yeah.

Chris [00:18:28]: Thank you for keeping me on track. So the inner circle is the programming language, right? And so it's a good way to make stuff go fast. And next level out is you say, okay, well, you know what's cool? AI. Have I convinced you? And so if you get into the world of AI, you start thinking about models. And beyond models, now we have Gen AI. And so you have things like pipelines, right? And the entire pipeline where you have KV cache orchestration and you have stateful batching. And I mean, you guys are the experts, agentic everything and all this kind of stuff. And so next level out is a very simple Gen AI inference focused framework we call Max. And so Max has a serving component. Is it Max Engine? Yeah. Yeah. Okay. There's different sections. We just call it Max. We got too complicated with sub-brands. This is also part of our R&D on branding is that- HBO has the same problem. Yeah, exactly. Well, and also like who named LLVM? I mean, what the heck is that? Right? So- Honestly, it's short. It's Google-able. Not the worst. Yeah. And VLLM came and decided to mess with it. Yeah. Yeah. No. So Max, the way to think about it is it's not a PyTorch. That's not what it wants to be, but it's really focused on inference. It's really focused on performance and control and latency. And if you want to be able to write something that then gets Python out of the loop of the model logic, like it's really good for control. And so it dovetails and is designed to work directly with Mojo. And so within a lot of LLVM, there's LLM applications. As you all know, there's a lot of very customized GPU kernels. And so you have a lot of crazy forms of attention, like the deep seek things that just came out and like all this stuff is always changing. And so a lot of those are custom kernels. But then you have a graph level that's outside of it. And the way that has always worked is you have, for example, CUDA or things like Tritonlang or things like this on the inside, and then you have Python on the outside. We've embraced that model, like don't fix what ain't broken. So we use straight Python for the model level. And so we have an API. It's very simple. It's not, it's not like designed to be fancy, but it feels kind of like a very simple PyTorch. And you can say, give me an attention block, give me these things, like configure out these ops, but it directly integrates with Mojo. And so now it's, you get full integration. And in a way that you can't get because none of the other frameworks and things like this can see into the code that you're running. And so this means that you get things like automatic kernel fusion. What's that? Well, that's a very fancy compiler technology that allows you to say, okay, you write one version of a flash attention and then cool. We can auto fuse in and so you, and the other activation functions that you might want to use, and you don't have to write all the permutations of these kernels, but that just means you're more productive. That means you get better performance. I mean, it's like a lot of things. It's just drives down complexity in the system. And so you shouldn't have to know there's a fancy compiler. Everybody should hate compilers. Like the only reason people should know about compilers is if they're breaking, right? And so it just feels like a, a very nice, very ergonomic and efficient way to like build custom models and customize existing models and things like this. And so with max, we have five, 600 very common model families and, and implemented that you can see a build stop modular.com. We have a whole bunch of models. You can scroll through them. You can get the source code and play with them and do that. That's actually really great for people that care about serving and research and, and, and all this, this kind of stuff. Next layer out is you say, okay, well you have a very fancy, uh, way to do serving on a single node. That's pretty useful and pretty important, but you know, it's actually cool, large scale deployment. And so we have a next level out, level out cluster level. And so that's the, okay, cool. I have a Kubernetes cluster. I have got a platform team. They've, uh, got a three-year commit on. 300 GPUs. And now I have product teams. I want to throw workloads against this shared pool of compute and the folks carrying the pagers want the product teams to behave and so they want to keep track of what's actually happening. And so that's the cluster level that goes out and each of the, and so very fancy, um, prefix caching on a per node basis. And then you have intelligent routing and there's a whole bunch of, uh, disaggregated pre-fill and like a whole bunch of cool technologies at each of these layers. But the cool thing about it is that they're all co-designed. Yup. And because the inside is heterogeneous, you can say, Hey, I have some in AMD, I have some Nvidia. Hey, I throw a model on there, run it on the best architecture. Well, this actually simplifies a lot of the management problems. And so a lot of the complexity that we've all internalized as being inherent to AI is actually really a consequence of these systems that are being designed together. And so to me, what, you know, my number one goal right now is to drive out complexity, both of our stack, cause we do have some tech debt that we're still fixing, but, um, but of AI in general and AI in general has way more tech debt. And then it deserves.

Swyx: Well, yeah. I mean, there's only so much that most people excluding you can do to comprehend their part of the stack and optimize it. I'm curious if you have any views or insider takes on what's happening with VLLM versus SGLang and everything coming out of Berkeley.

Chris Yeah, I don't, I have outsider perspective. I don't have any inside knowledge. Um, SG Lang seems to me as an outsider, so I'm not directly involved with either community. Sure. Um, so I can be a fan boy without, without participating, but, um, as she, they have a beef going on. So I see, I don't know the politics and drama, but SG Lang to me seems like a very focused team that has specific objectives and things they want to prove. And they're executing really hard towards a specific set of goals, much like the modular team. Right. And so that's, so I think there's some kindred spirits there. VLM seems much more like a massive community with a lot of stakeholders, a lot of stuff going on and it's kind of a hot mess. And so. But they want to be like the default inference platform that everyone kind of benchmarks against. Well, and, and I mean, it's, as far as I know, it's like crushing TRT LLM and some of the other older systems and things like that. And so I think like metrics are good probably for that. And so I can't speak to their ambition, but, but it seems structurally like they're very different approaches. One is say, let's be really good at a small number of things that are really important. Yeah. That's our, that's our approach too. One is saying, let's just say yes to lots of things. And then we'll have lots of things and some of them will work and some of them don't. One of the challenges I hear constantly about VLM for what it's worth is if you go to go to the webpage, they say, yeah, we support all of this hardware. We support Google TPUs and Inferentia and AMD and Nvidia, obviously, and CPUs and this and that. And the other thing is they have this huge, huge list of stuff that they, they support. But then you go and you try to follow any demo on some random webpage saying, here's how you do something with VLM. And if you do it on a non-NVIDIA piece of hardware, it fails. And so what is the value of something like VLM? Well, the value is you want to build on top of it. Generally, you don't want to be obsessing about the internals of it. If you have something that, you know, advertises that it works and then you pick it up and it doesn't work and I have to debug it. It's kind of a betrayal of the goal. And so to me, again, I know how hard it is. I know the fundamental reason why they're trying to build on top of a bunch of stuff below them that they didn't invent that doesn't work very well, honestly. Right. Cause we know that the hardware is hard and software for hardware is even harder these days. So I understand why that is, but, but that approach of saying, here's all the things we can do. And then having a sparse matrix of things that actually work is very different than the conservative approach, which is saying, okay, well we do a small number of things really well and you can rely on it. And so I can't say to which one's better. I mean, I appreciate them both and they both have great ideas. And the fact that there's the competition is good for everybody in the industry. It is. And so. They're in the benchmark wars state of the way that this industry evolves. That's right. But, but, but I also hear just on the enterprise side of things that they're all so good. They don't want to follow the drama. Right. And so like, this is where having less chaos and more, I can work with somebody is actually really valuable these days. And, and you need state of the art, you need performance and things like this, but, but actually having somebody that is executing well and works together, can work with you is also super important.

Swyx Have we rounded out the offerings? You talked about Max. Yeah. Yeah. I realized like it impresses me that you named the company Modular and you've designed all these things in a very modular way. Yeah. I'm wondering if there are any sacrifices to that or is modular everything the right approach? Like there's no trade-offs.

Chris If you allow me to show my old age, like my, my obsession with modular design came from back when creating LLVM. Okay. So at the time there was GCC. GCC is again, I love it as a C and C++ compiler and stuff. Right. And have tons of respect for it, but it's a monolith. It was designed from the old school Unix, like, like compilers are Unix pipe. Everything's global variables, C code. It was written in KNRC. If you even know what that is anymore. And so it was from a different epoch of software and LLVM came and said, you know what everybody teaches you in school is you have a front end and optimizer and a code generator. Let's make that clean. Right. And so at the time people told me again, first of all, it's impossible to replace GCC because so established, et cetera, et cetera. But then also that you can't have clean design because look at what GCC did and it's successful. And therefore you can't have performance or good support for hardware or whatever it is without that. They might be right. If in an infinitely perfect universe, but we don't live in an infinitely perfect universe. Instead, what we live in is a universe where you have teams, like you have people that are really good at writing. Parsers, people that are really good at writing optimizers, people that are really good at writing a code generator for x86 or something like that. And so these are very different skill sets. Also, as like we see in AI, the requirements change all the time. And so if you write a monolith that is super hard code and hack today, well, two years from now is going to be relevant. And this is why what we see a lot in AI is we see these like really cool and very promising systems that rise and grow very rapidly. And then they end up falling. It's because they're almost disposable frameworks. This concept really comes from... The language is a framework. Each of these systems end up being different. But what I believe in very strongly is that in AI, we want progress to go faster, not slower. That's controversial because it's already going so fast, right? But if we can accelerate things, we get more product value into our lives. We get more impact. We get all the good things that come with AI. I'm a maximalist, by the way. But with that comes... The reality is that everything will change and break. And so if you have modularity, if you have clean architecture, you can evolve and change the design. It's not over-specialized for a specific use case. The challenge is you have to set your baseline and metrics right. This is why it's like compare against the best in the industry, right? And so you can't say, or at least it's unfulfilling to me to say, let's build something that's 80% as good as the best. But it's got this other benefit. I want to be best of in the categories we care about.

Open Source Contributions and Community Involvement

Alessio [00:29:16]: And when it comes to using like a prefix caching, pay-per-use, etc. Paged attention. How do you decide what you want to innovate on versus like, hey, you are actually the team that has built the best thing. We're just going to go ahead and use that. Yeah.

Chris [00:29:28]: I'm very shameless about using good ideas from wherever they come. Like everything's a remix, right? And so if somebody, if I don't care who it is, if it's Nvidia, if it's SGLang or VLM, and if somebody has a good idea, let's pull it together. But the key thing is make it composable and orthogonal and flexible and expressive. To me, what I look at is not just the things that people have done and put together. I don't put it into VLM, for example, but the continuous stream of archive papers, right? And so I follow, you know, there's a very vibrant industry around inference research. It used to be it was just training research, right? And much of that never gets into these standard frameworks, right? And the reason for that is you have to write massively hand-coded CUDA kernels and all this stuff for any new thing. And I would want one new D type and I have to change everything because nothing composes. Mm-hmm. And so this is, again, where if you get some of these software architecture things right, I mean, which admittedly requires you to invent a new programming language. There's a few hard parts to this problem, but the cool thing about that is you can move way faster. And so I'll give you an example of that. This is fully public because not only do we open source our thing, we open source all the version control history. And so you can go back in time and say, like, Chris likes open source software, by the way. Let me convince you of this. I don't like people meddling in my stuff too early. But I like open source software. And so you can go look at how we brought up H100, built Flash Attention from scratch in a few weeks, built, like, all this stuff. We're beating the TreeDAO reference implementation that everybody uses, for example, right? Written fully in Mojo. Again, all of our GPU kernels are written in Mojo. You can go see the history of the team building this, and it was done in just a few weeks, right? And so we brought up H100, entirely new GPU architecture. If you're familiar, it has very fancy asynchronous features and tensor memory accelerator things. And, like, all this goofy stuff they added, which is really critical for performance. And again, our goal is meet and beat QDNN and TRTLM and these things. And so it's not, you can't just do a quick path to success. You have to get everything right and everything to line up. Because any little thing being wrong nerfs performance. And we did that in, I think it was less than two months. Public on GitHub, right? And so, like, that velocity is just incredible. I think it took nine months or 12 months to invent Flash Attention. And it took another six months to get into VLM. And this is just, like, that latter part is just integration work, right? And so now you're talking about, like, building all this stuff from scratch in a composable way that scales against other architectures and has these advantages. It's just a different level. So anyways, our stuff's still early in many ways. And so we're missing features. But if you're interested in what we're doing, you can totally follow the changelogs. And you can, like, follow them nightly where we publish all the cool stuff and all the kernels are public. And so you can see and contribute to it. And you can do this as well if you're interested.

Alessio [00:32:19]: Yeah. Do you have any requests for projects that people should take on outside of modular that you don't want to bring in?

Modular's Differentiation from VLLM and SGLang

Chris [00:32:25]: Absolutely. So we're a small team. I mean, we're over 100 people. But compared to the size of the problem we're taking on. Size of your ambitions. Yeah. We're infinitesimal compared to the size of the AI industry, right? And so this is where, for example, we didn't really care about Turing support. Turing's an older GPU architecture. And so somebody on the community is like, okay, I'll enable a new GPU architecture too. So they contributed Turing support. Now you can use Max and Colab for free. That's pretty cool. There's a bunch of operators. So we're very focused on AI and Gen AI and things like this. By the way, our stuff isn't AI specific. So people have written ray tracers and there's people doing flight safety and, like, all kinds of weird things. Flight simulation? At our hackathon, somebody made a demo of, like, looking at, I think it was a voice transcript, the black box type traffic. And then predicting when the pilot had made a mistake. And the airplane was going to have a big problem. And predicting that with high confidence. So it's like your car, you know, you're driving your car until it starts beeping when you're not slowing down. It's like lane assist. Yeah, yeah, yeah. Okay, okay. That kind of thing. But for the FAA. Wow. And stuff like this, right? And I know nothing about that. Like, this is not my domain. Trust me. This is the power of there's so many people in our industry that are almost infinitely smart, it feels like. They're way smarter than I am in most ways. And you give them tools and you enable things. And also you have AI coding tools and things like this that help, like, bridge the gap. I think we're going to have so many more products. This is really what motivates me, right? And this is where I think we talked about last time. One of the things that really frustrated me years ago and inspired me to start Modular in the first place is that I saw what the trillion-dollar companies could do. Right? You look at the biggest labs with all the smart people that built all the stuff vertically top to bottom. And they could do incredible product and research and other work. And that, you know, nobody with a five-person team or a startup or something could afford to do. And here we're not even talking about the compute. We're just talking about the talent. Well, and the reason for that is just all the complexity. It only worked, for example, at Google because you could walk, proverbially walk down the hallway, tap on somebody's shoulder and say, hey, your stuff doesn't work. How do I get it to work? I'm off of the happy path. Like, how do I get this new thing to work? And they'll say, I'll hack it for you. Right? Well, that doesn't work at scale. Like, we need things that compose right, that are simple, that you can understand, that you can cross boundaries. And, you know, so much of AI is a team sport. Right? And we want it so more people can participate and grow and learn. And if you do that, then I think you get, again, more product innovation and less just like gigantic AI will solve all problems kind of solutions and more fine-grained, purpose-built, product-integrated AI. Yeah. I think one way of phrasing what you're doing is, you know, you have this line about it's, you want AI to accelerate and that's contrarian because it's already fast and people are already uncomfortable. But I think you're more, you're accelerating distribution. I have mixed feelings about the words democratizing, but that's really what you're doing. Well, it used to be, democratizing AI used to be the cool thing back in 2017. I know, it's not cool anymore. Yeah, I know. But it used to be the cool thing. And what it meant and what it came to mean is democratizing model training. Right? And it's super interesting. Again, as veterans, like back in 2017, AI was about the research because nobody knew both what the product applications were, but then we didn't know how to train models. And so things like that. Things like PyTorch came on the scene. And I think PyTorch gets all credit for democratizing model training, right? It's taught to pretty much every computer science student that graduates. That's a huge deal, but nobody democratized inference. Inference always remained a black art, right? And so this is why we have things like VLM and SG Lang because they're the black box that you can just hopefully build on top of and not have to know how any of that scary stuff works. It's because we haven't taught the industry how to do this stuff. And, you know, it'll take time. But I think that that's not an issue. It's not an inherent problem. I think it's just that we don't have like a PyTorch for inference or something like that. And so as we start making this stuff easier and breaking it down, we can get a lot more diffusion of good ideas. I want to double click a little bit more on some technical things. But just to sidetrack on, you know, VLM is an open source project led by academics and all that. And I think a lot of the other inference team, because effectively every team is a startup, like the fireworks together, you know, all those guys. Your business model is very different from them. And I want to spend a little bit of time on that. Happy to talk about it. You intentionally, well, you believe in open source, but, you know, it's not just that. You just choose not to make money from a lot of the normal sort of hosted cloud offerings that everyone else does. Yeah. There's a philosophical reason and differentiation. There's a whole bunch of reasons. Yeah. So like maybe remind people of what that is, like how basically how they pay you money and what they get for that and why you pick that. So, again, we're doing this in hard mode. Right. We took a long path to product. We're not just right away. We're going to get a few kernels and have some alpha and go buy a GPU reserve thing and resell our GPUs like that. That path has been picked by many companies and they're really good at it. So that's not a contribution that I'm very good at. And I'm not going to go build a data center for you like this. There's people that are way, way better than that. Okay. And so all the best luck. I want that. Literally Crusoe like walking the Stargate grounds. I want those people to use Macs. You know, I'm pretty good. That's a Crusoe. Yeah. I'm pretty good at this software thing. And so you can handle all the compute. Moreover, if you get out of startups, you have a lot of people that are struggling with GPUs and cloud. Right. And so GPUs and cloud fundamentally are a different thing than CPUs and cloud. And a lot of people walk up to it and say, it's all just cloud. Right. But let me convince you that's not true. And so, first of all, CPUs and cloud. Why was that awesome? Well, all the workloads were stateless. They all could horizontally auto scale. CPUs are comparatively cheap. And so you get elasticity. That's really cool. You can load them pretty quickly. Yeah. It's not like gigabytes of weights. Yeah. And so it turns out what business, you know, knows what they're doing in two and a half years? Nobody. Nobody. Right. And so cloud for CPUs is incredibly valuable because you don't have to capacity plan that far out. Right. Now you fast forward to GPUs. Well, now you have to get a three year commit. Three years. Right. So you have a three year commit on a piece of hardware that Jensen's going to make obsolete in a year. Right. And so now you get this thing. And so you make some big commit. And what do you do with it? Well, you have to overcommit because you don't know what your needs are going to be and you're not ready to do this. Also, all the tech is super complicated and scary. Also, GPU workloads are stateful. And so you talk about like the fancy agentic stuff and you all know all this stuff. Yeah. It's stateful. And so now you don't get horizontal auto scaling. You don't get stateless elasticity. And so you get a huge management problem. And so what we think we can do and we can help with people is say, OK, well, let's give you power over your compute. And a lot of people have different systems and there's very simple systems that go into this. But you can get like five X performance TCO benefit by doing intelligent routing. That's actually a big deal for a platform team. They don't like to have to deal with this. They want hardware optionality to get to AMD. They want this kind of. Power and technology. And so we're very happy to work with those folks. The way I explain it in a simple way is that a lot of the endpoint companies and there's a lot of them out there. And so you can't make they're not all one thing. They obviously have pros and cons and tradeoffs. But but generally the value prop of an endpoint is to say, look, AI and AI software and applications and workloads. It's all a hot mess. It's too complicated. Don't worry your little head about it. I'll take AI off your plate so that you don't have to worry about it. We'll take care of all the complexity for you. And it'll be easy. Just talk to our endpoint. Our approach is to say, okay, well, guess what? It's all a hot mess. Yes, 100%. Like, it's horrible. It's worse than you probably even know. And get to tomorrow. It's going to be even worse because everything keeps changing. We'll make it easy. We'll give you power. We'll give you superpowers for your enterprise and your team. Because every CEO that I talk to not only wants to have AI in their products, they want their team to upskill in AI. And so we don't take AI away from the enterprise. We give power over AI. We give power back to their team and allow them to both have an easy experience to get started. Because a lot of people do want to run standard commodity models and you do want stuff to just work as table stakes. But then when they say, hey, I want to actually fine-tune it, well, I don't want to give my proprietary data to some other startup or even some big startups out there. And you know whose these are. That's my proprietary IP. And then you get to people who say, hey, I have a fancy data model. I actually have data scientists. I actually have a few GPUs. I'm going to train my model. Cool. That got democratized. Now how do I deploy it? Right? Well, again, you get back into hacking the internals of VLM. And PyTorch isn't really designed for KV cache optimizations and all the modern transformer features and things like this. And so suddenly you fall off this complexity cliff if you care about it being good. And so we say, okay, well, yeah, this is another step in complexity. But you can own this and you can scale this. And so we can help you with that. And so it's a different trade-off in the space. But I will admit that there are time to market and revenue growth and stuff like that. And that has been much faster because they didn't have to build an entire replacement for CUDA to get there.

Modular’s Business Model and Monetization Strategy

Alessio [00:41:37]: Nice. And when it comes to charging, people are buying this as a platform. It's not tied to token, inferred, anything like that.

Chris [00:41:45]: We have two things going on. So the Max framework and the Mojo language, free to use on NVIDIA and CPUs, any scale. Go nuts. Do whatever you want. Please send patches. It's free. Right. Why is this? Well, it turns out CUDA is already free. NVIDIA is already dominant in here. We want the technology to go far and wide. Use it for free. It would be great if you send us patches, but you don't even have to do that, right? We do ask you to allow us to use your logo on our webpage. And so send us an email and say, hey, you're using it and you're scanning on 10,000 GPUs. That'd be awesome. But that's the only requirement. If you want cluster management and you want enterprise support and you want things like this, then you can pay on a per GPU basis and you can contact our sales team. And then we can work out a deal and we can work with you directly. And so that's how we break this down. And also, let me say it. One thing I would love to see, and again, it's still early days, but I would love to see PyTorch adopt Macs. I'd love to see VLM adopt Macs. I'd love to see SGLang adopt a Macs. We have our own little serving thing, but go look at it. It's really simple. It would be amazing. And again, we're in the phase now where I do want people to actually use our stuff. And so we have historically been in the mode of like, no, our stuff's closed, stay away. But we're phase shifting right now. And so you'll see much more of this being announced. Yeah. I don't know how to make this happen, but I think you win when Mistral, Meta, DeepSeq, and Quen adopt you and ship you natively, right? How does that happen? I don't want to talk that far in the future. I don't think it's that far. We may have an industry-leading state-of-the-art model launching first on Mac soon. I'll stay tuned for that, but also let us know. But that hasn't happened, so assume it doesn't happen. I think that's when it really tips. Because then everyone's like, okay, it's good enough for them, it's good enough for us, right? And then you get the rest of the industry. Yeah. But again, I mean, I'm in it for a long game, right? And I realize, again, the stuff I work on, it takes time for people to process. And so what we need to do and what I want us to do and what I ask the team to do is keep making things better and better and better and better and better. And there's like an S-curve of technology adoption. And so I think it's great that there's a small number of crazy early adopters that were using our stuff in February. Before it was open source, and it made no rational sense. It barely worked. But it was amazing, and I'm very thankful for those people. And then, of course, we open sourced it, and we started teaching people, and you get a much bigger adoption curve. You make it free, go adopt and go. And then, as you say, there's more validation that will be coming soon. And each of these things is a knee in the curve. But what it also does is it gives us the ability to fix bugs and improve things and add more features and roll out new capabilities.

Swyx: How does this feel, rolling this out, as compared to software?

... [Content truncated due to size limits]