This is the full chapter on time, a building block of data modeling. I decided to publish the whole thing for continuity, since I also substantially edited the first part of the chapter. Time is often overlooked, and I want to give it sufficient, practical coverage. Here, I lay the foundation of what you need to know about time for your data modeling work. If you know how time is queried, you'll have a better idea of how to model it.

I might add some other ways of querying time, but we’ll see. Let me know if there’s anything else you’d like to see.

Coming up: Meaning (semantics, ontologies, etc.) as a building block, organizational considerations for data modeling, and more. I'm pulling together my scrawlings and notes, so expect around one new chapter per week.

Thanks,

Joe

Why Time Matters in Data Modeling

Time - “A finite extent or stretch of continued existence, as the interval separating two successive events or actions, or the period during which an action, condition, or state continues; a finite portion of time (in its infinite sense: see sense A.IV.34a); a period. Frequently with preceding modifying adjective, as a long time, a short time, etc.” - Oxford dictionary

In 2012, the Knight Capital Group¹, one of the largest stock trading firms in the U.S., lost $440 million in less than an hour due to a software deployment that failed to retire old time-sensitive code properly. The issue? A legacy module that hadn't been used in years was accidentally reactivated on some servers but not others. The module triggered automated trades at the wrong time, resulting in a flood of unintended orders being sent into the stock market. Knight bought high and sold low, spiraling into massive losses almost instantly. The system became confused by different types of time and lacked safeguards to prevent trades from occurring at the wrong times. By the time anyone noticed, the company was insolvent.

Poor handling of time has cratered many applications, analyses, and ML/AI models. Time is a top-priority concern for any data system, yet it remains one of the most nuanced and misunderstood aspects of our work. Reality isn’t static. Events happen, states change, and when we model data, we are almost always attempting to capture a faithful record of these changes over time.

In this chapter, we will build a comprehensive framework for working with time in data models. We'll start by defining the fundamental types of time and the concept of temporality for tracking history. From there, we'll cover the practical parts of representing and querying time.

Fundamental Types of Time

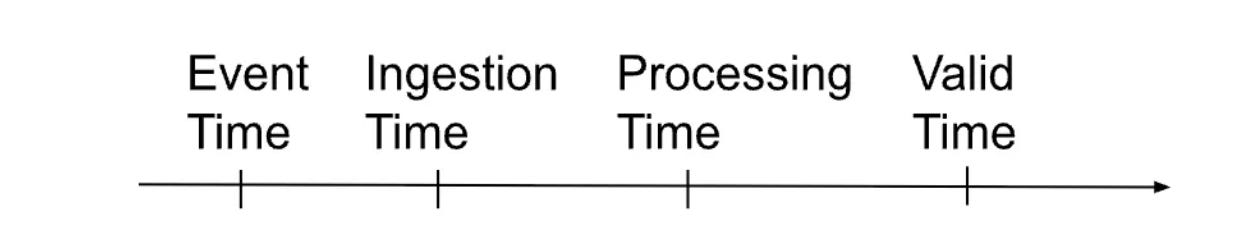

Time in data modeling isn't a single concept; it comes in several distinct flavors. Data is created and then moves from place to place as it is consumed and transformed for various use cases, and each of these movements occurs at a different point in time. To keep things practical, let's focus on the four types most relevant to data modeling work: event time, ingestion time, processing time, and valid time. Here, we assume data is generated and captured by one or more systems.

Figure x-y: Four major types of time in data modeling
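To make the four types concrete, here is a minimal sketch of a single record carrying all of them. The class name `TimedRecord`, its field names, and the sample values are hypothetical. It also assumes valid time is represented, as it commonly is in temporal databases, as an interval (`valid_from`, `valid_to`), with `None` meaning "still valid." The gap between event time and ingestion time is one simple, useful thing you can compute once these timestamps are kept separate.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

UTC = timezone.utc


@dataclass(frozen=True)
class TimedRecord:
    """Hypothetical record carrying the four kinds of time (names are illustrative)."""
    payload: str
    event_time: datetime       # when it happened in the real world, stamped at the source
    ingestion_time: datetime   # when our system first received it
    processing_time: datetime  # when a job transformed or computed on it
    valid_from: datetime       # start of the period the fact holds (assumed interval form)
    valid_to: Optional[datetime] = None  # None = still valid (assumed convention)

    def ingestion_lag(self) -> timedelta:
        """How long the record took to reach us after it actually happened."""
        return self.ingestion_time - self.event_time


rec = TimedRecord(
    payload="sensor_42 temperature=21.5",
    event_time=datetime(2024, 5, 1, 12, 0, 0, tzinfo=UTC),
    ingestion_time=datetime(2024, 5, 1, 12, 0, 7, tzinfo=UTC),
    processing_time=datetime(2024, 5, 1, 13, 0, 0, tzinfo=UTC),
    valid_from=datetime(2024, 5, 1, 12, 0, 0, tzinfo=UTC),
)

print(rec.ingestion_lag())  # 0:00:07
```

Keeping each kind of time in its own field, rather than overloading a single `timestamp` column, is what makes questions like "how late does our data arrive?" answerable at all.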

Event time refers to when an event first occurs in the real world. It’s the timestamp associated with the actual occurrence of an event, such as a user clicking a link, a financial transaction, a sensor reading, or an inference made by an AI model. This timestamp is typically generated at the source and should be considered immutable, as it reflects a fact about the past that cannot change. Event time provides the proper chronological order of events, regardless of when they’re ingested, processed, or stored. In some contexts, event time may also be referred to as “actual time” or “real-world time.”
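The claim that event time gives the proper chronological order "regardless of when they're ingested" can be shown with a small sketch. The `Click` type, the click IDs, and the scenario of a phone buffering a click while offline are all hypothetical. The dataclass is frozen to mirror the idea that event time is immutable once recorded: assigning to `event_time` after creation raises an error.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

UTC = timezone.utc


@dataclass(frozen=True)  # frozen: fields, including event_time, cannot be reassigned
class Click:
    click_id: str
    event_time: datetime  # stamped at the source when the click happened


# Hypothetical clicks listed in the order they ARRIVED at the pipeline.
# c2 was buffered on an offline phone and delivered after c3.
arrivals = [
    Click("c1", datetime(2024, 5, 1, 9, 0, 0, tzinfo=UTC)),
    Click("c3", datetime(2024, 5, 1, 9, 0, 30, tzinfo=UTC)),
    Click("c2", datetime(2024, 5, 1, 9, 0, 10, tzinfo=UTC)),
]


def chronological(events):
    """Recover real-world order from event time, ignoring arrival order."""
    return sorted(events, key=lambda e: e.event_time)


print([c.click_id for c in arrivals])                 # ['c1', 'c3', 'c2']
print([c.click_id for c in chronological(arrivals)])  # ['c1', 'c2', 'c3']
```

Ordering by arrival would tell a false story about what the user did; ordering by event time recovers what actually happened.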