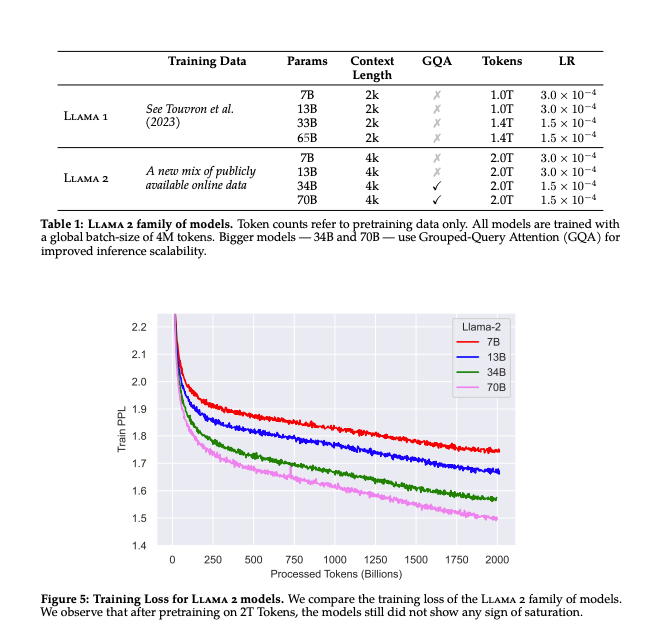

As first discussed on our May Emergency pod and leaked 4 days ago, Llama (renamed from LLaMA) was upgraded to Llama 2 (pretraining on 2 trillion tokens with 2x the context length - bigger than any dataset discussed in Datasets 101, and adding ~$20m of RLHF/preference annotation) and released for commercial use on 18 July.

It immediately displaced Falcon-40B as the leading open LLM and was quickly converted/quantized to GGML and other formats. Llama 2 seems to outperform all other open source models in their equivalent weight class.

Why are open models important? The intersection of Open Source and AI is one of the oldest themes on this publication, and there has been a raging debate on the security and reliability of the OpenAI models and APIs. Users have reported GPT-4’s quality going down (which has been denied and denied and, as of today, given some supporting data from Databricks) and have complained about API reliability and rapid deprecation schedules. Last and surely biggest, there are entire classes of businesses and government/healthcare/military organizations that categorically cannot send any of their sensitive data to an external API provider, even if it is OpenAI through Azure. The only way to have total control is to own and serve your own models, and Llama 2 now pushes forward the state of the art there (your own GPT-3.5-quality model, though it is nowhere near Claude 2 or GPT-4).

As we do with breaking news, we got on to Twitter Spaces again to chat with two scheduled guests:

* Nathan Lambert, ML Researcher at Huggingface and author of Interconnects who had the best summary of the Llama2 paper

* Matt Bornstein, organizer of the a16z infra team that launched Llama2.ai (source here) and has been coding up a storm with AI demo apps, unusual for VCs

as well as Anton Troynikov of Chroma, Russell Kaplan of Scale AI, and Omar Qazi of the Whole Mars Catalog.

Enjoy!

Show Notes

* Official links

* GitHub (Llama 2 commit)

* Use policy, Statement of Support for Open Approach

* Where to try

* Llama2.ai (source), Perplexity Llama Chat

* Live playground/API on Replicate, deploy all versions on Baseten

* https://huggingface.co/spaces/ysharma/Explore_llamav2_with_TGI

* Dev ports - simonw llm-replicate, ggml using llama.cpp (7B, 13B) or pinokio, ollama, Core ML port

* Timeline

* 24 Feb - LLaMA 1 announced

* 6 May - our No Moats podcast - first mention of Zuck opening up Llama

* 14 July - Llama 2 leaked

* 18 July - Llama 2 announced

* Community notes

* Nathan’s research paper recap

* Usage restrictions - MAU restriction, derivative models

* >$20m price tag (rlhf, jimfan),

* Separate models for safety and helpfulness (jimfan)

* Mistral AI founders left out of paper

* Interesting fails:

Timestamps

* [00:02:30] Introducing the speakers

* [00:03:32] Nathan Lambert intro

* [00:04:48] General Summary of Llama 2

* [00:05:57] Sarah Silverman killed Dataset Transparency?

* [00:08:48] Simon's Recap of Llama 2

* [00:11:43] Matt's Intro

* [00:12:59] a16z Infra's new AI team?

* [00:15:10] Alessio's recap of Llama 2

* [00:17:26] Datasets 101 Followup

* [00:18:14] Context Length 4k

* [00:20:35] Open-ish Source? Usage Policy and Restrictions

* [00:23:38] Huggingface Responsible AI License

* [00:24:57] Pretraining Llama 2 Base Model beyond Chinchilla

* [00:29:55] Llama 2 is incomplete? Race to publish

* [00:31:40] Come for the Llama, stay for the (Meta) drama

* [00:33:22] Language Translation

* [00:35:10] Llama2's coding abilities

* [00:35:59] Why we want to know about the training data

* [00:37:45] The importance of Meta pushing forward Truly Open AI

* [00:40:59] Llama 2 as Enabler of Startups

* [00:43:59] Where you can try Llama 2

* [00:44:25] Do you need dataset transparency if you have evals?

* [00:45:56] >$20m cost of Llama 2 is primarily preference data collection

* [00:48:59] Do we even need human annotators?

* [00:49:42] Models Rating Models

* [00:53:32] How to get Code preference data

* [00:54:34] Llama 2 Finetuning Ecosystem

* [00:56:32] Hey Apple: Llama2 on Metal pls

* [00:57:17] Llama 2 and Chroma

* [01:00:15] Open Source MoE model?

* [01:00:51] Llama 2 using tools

* [01:01:40] Russell Kaplan on Scale AI's Llama 2 plans

* [01:03:31] Scale annotating code?

* [01:04:36] Immortality

* [01:04:59] Running Llama on your phone

* [01:06:54] Sama <3 Satya <3 Zuck? "Azure as Launch Partner"

* [01:10:58] Meta "Open Source" Leadership

* [01:11:56] Prediction: Finetuning => New Use Cases from Internal State

* [01:13:54] Prediction: Llama Toolformer

* [01:14:39] Prediction: Finetune-for-everything

* [01:15:50] Predictions: Llama Agents

* [01:16:35] dP(Doom)?

* [01:19:21] Wrapping up

Transcript

[00:00:00] Introducing the speakers

[00:00:00] Alessio Fanelli: There's not a single dull day in this space. I think when we started the podcast in January, a lot of people asked us, how long can you really do this? Just focusing on AI research and, and models. And I think the, the answer is clear now. A long time. So excited for this and excited to have Simon again.

[00:00:16] You're basically an honorary guest host of all of our Twitter Spaces. Cool. Thank you.

[00:00:21] Simon Willison: No, it's great to be here again.

[00:00:23] Alessio Fanelli: And Nathan, thanks for joining us. I actually shared your writeup on the Llama 2 technical details with swyx this morning. So it's great to have you here to dive into some of the details.

[00:00:33] Nathan Lambert: Yeah, sounds good. As is probably clear, Hugging Face was trying to collaborate on releasing the model on the platform. So we ended up getting some early details, which made it a lot easier for me to cram study before the chaos hit.

[00:00:48] Alessio Fanelli: No, that's great. It's kind of what happened with the Code Interpreter episode, when Sean and I had access for about five hours and Simon was like, I've been playing with this for weeks, and had all the inside scoops.

[00:00:59] So I think this will be a, a good episode.

[00:01:02] Nathan Lambert intro

[00:01:02] Alessio Fanelli: Maybe Nathan, you just want to give people a little bit of background on what you do at Hugging Face and, yeah, your experience with the Llama 2 kinda preview. Yeah. So

[00:01:12] Nathan Lambert: I've been a researcher helping lead reinforcement learning from human feedback efforts at Hugging Face, which really means I do some research and I try to figure out how to fine-tune models to do what people want.

[00:01:26] Generally we're trying to operate at a scale a little bit smaller than what Meta is doing, cuz we obviously don't have that kind of resources at a startup. So I do a lot of technical research and also try to actually engage and communicate that with the community. And specifically with Llama, I think I was most interested in kind of the research side.

[00:01:48] I think the paper is a phenomenal artifact and it's clear that the model is really strong in a lot of areas. And then kind of the big picture trends of where open source is going. Like this is a clear step in a direction that a lot of people wanted, but weren't sure if it was gonna happen. Yep.

[00:02:04] Alessio Fanelli: What are some of the things that stood out to you?

[00:02:06] I think a lot of the AI engineers in the audience that we have are not as deep into the details of the papers. We'd love to get a read from somebody like you who's much deeper at a, you know, model research level.

[00:02:18] General Summary of Llama 2

[00:02:18] Nathan Lambert: Yeah. It's like, where do I start? So I think as a general summary, the paper includes a lot of details on methodology. So like, what are the things that they did in their stack to build, to actually run this? And it misses a lot of details on what a specific data set actually looks like. It's clear that they have a really fine-tuned data set and they paid a lot of money for these data sets.

[00:02:46] It seems like now both Surge and Scale, which are probably two of the biggest data labeling firms, are claiming some part in it, which I find hilarious, cause it's really unclear. So Meta took the approach of starting with open source preference data and then added a lot onto it.

[00:03:04] And the most interesting part to me on this preference data, which is a new technical approach, is they trained two preference models, two reward models, one toward making the model helpful and one for making the model safe. And then in terms of open source models, it's clearly more performant on kind of ground truth benchmarks, and then it's safer.

[00:03:27] Sarah Silverman killed Dataset Transparency?

[00:03:27] swyx: That's where I was

[00:03:28] Simon Willison: gonna wrap up to clarify, right. This is a big difference from the first Llama paper. Cause the first Llama paper was so detailed in terms of how the training data worked that people were able to essentially replicate it. And so you're saying that in this new paper, there's much less transparency as to how the training worked

[00:03:45] Nathan Lambert: on the data side.

[00:03:46] Yeah, I think they did a lot of new methodological things too, so with taking the time to explain that, it's not as much of a data-focused paper. There's no table that is like, this is where the distribution of pre-training data came from. I would guess that it's a similar data set to the original Llama. One of the details that's really interesting is that they mention they up-weight high-factuality content.

[00:04:14] So things that probably seem like Wikipedia. It seems like they're doing some sort of up-ranking during base model training, but they did some type of thing they didn't detail

[00:04:24] swyx: because it's also

[00:04:25] Simon Willison: worth mentioning, I mean, they're being sued right now by Sarah Silverman of all people. I mean, it's one of the many lawsuits flying around, but there's a lawsuit specifically over the training data involved in the first Llama, because one of the things that went into that was this data set called Books3, and Books3 is like 190,000 pirated eBooks, like the full text of all of the Harry Potter novels, things like that.

[00:04:45] Which, yeah, that's very difficult to say that that's not extremely copyrighted data. So I wonder if that's part of the reason they've been less transparent this time round is that, you know, it got them in trouble last time.

[00:04:57] Nathan Lambert: Yeah. One of my colleagues on kind of the Ethics and Society side immediately pointed out that publicly available data is the phrase often used in the paper, but that does not mean that it's free from copyright issues and/or terms of service issues.

[00:05:11] It means that I could go on a computer and download it.

[00:05:13] Simon Willison: Right. If you, if you scrape the entire internet, very little of that stuff is actually like public domain.

[00:05:21] Nathan Lambert: Yeah. And I think, without going down kind of a social issues rabbit hole right now, the notion of public is being extremely strained by AI and changing communication practices. And it's just like kind of those things where it's like, oh, okay, here we go.

[00:05:36] And they also use words like democratize, and they have these sentences in the paper that are extremely value-laden, which is like the carbon footprint of our model. And releasing this is good because it'll mean a lot of people don't have to train models and burn more CO2 in the future. And it's like, okay, Meta, like, like what?

[00:05:53] Where are you going with

[00:05:54] swyx: this? Yeah. Perhaps before we go too deep into the issues, cuz we have lots to talk about, I would also want to get a high-level overview from Simon and from Matt, who's also just joined us from a16z. So maybe Simon, you wanna go first with, like, just a recap for everybody of what you think the relevant details are about Llama 2, and then we'll talk about Matt's stuff.

[00:06:18] Simon's Recap of Llama 2

[00:06:18] swyx: Yeah.

[00:06:19] Simon Willison: So, yeah, I mean the headline here is that Llama 2 has been released and Meta kept their promise of doing a version of Llama that is usable for commercial purposes, which is so big because, like, Llama itself came out at the end of February, and so many models have been released on top of that.

[00:06:37] So, models like Vicuna, which was a fine-tuned Llama, all of them with the same "not usable for commercial purposes" warning. So now we've got a really high quality foundation model that we are allowed to use commercially. I think the amount of innovation we're gonna see over the next few weeks is just going to explode.

[00:06:54] You know, I feel like this is monumental on that front. In terms of quality, I never know how to interpret these benchmarks. The benchmarks all look good. You know, the claims are it's a bit better than Llama, it's competitive with ChatGPT, GPT-3.5, et cetera, et cetera. I have no reason to disbelieve that, but it always takes quite a while with these new models to get a feel for them.

[00:07:13] You have to spend time with them to really feel like, is it trustworthy as a summarizer, all of those kinds of things. My hunch is that it is gonna turn out to be extremely good. Like, I doubt that it'll turn out to be sort of a damp squib on that front. But yeah, so they've released it.

[00:07:30] It's available commercially and you are allowed to redistribute it, but the only way to officially get the weights is still to fill in a form on their website and wait for them to approve you, which is kind of stupid because obviously it's already started leaking. I downloaded a version onto my laptop this afternoon, which worked.

[00:07:47] There's a GGML TheBloke thing that's floating around on Hugging Face already, so, you know, within 24 to 48 hours, I think every possible version of this thing will be available to download without going through a waiting list. I'm almost not sure why they even bother with that.

[00:08:03] Especially since, you know, Llama leaked within a few days last time, and somebody ended up submitting a pull request to the GitHub README with a link to the BitTorrent for the Llama models, which Facebook didn't delete. You know, they kind of nodded and winked and said, yeah, this is what you can do.

[00:08:20] And now it's even legitimately okay to do it because the license says you can. But anyway, it's out there. You can run it on your computer right now, today. It's also hosted in a bunch of places. Andreessen Horowitz sponsored the version of it that's available on Replicate, although you actually do have to pay for that.

[00:08:37] I noticed that I've built up 26 cents in Replicate charges already playing around with that model. But it's available via API, or you can run it on your own machine, and, you know, it's open season. Let's all start poking around with it and seeing what it can do.

[00:08:52] swyx: It's open season.

[00:08:53] Speaking of Andreessen, yes, Matt. Hey.

[00:08:56] Matt Bornstein: Hey. Hey everyone. Thank you for having me. And Simon, if you wanna send me a Venmo request for 26 cents, I'll, I'll happily reimburse you.

[00:09:02] Simon Willison: Absolutely. Yeah.

[00:09:04] Matt Bornstein: We, we may lose about $3 on the transaction fee, but I think it'd be worth it

[00:09:09] swyx: just to throw in a term sheet in there for a data set.

[00:09:11] Nathan Lambert: You're good?

[00:09:13] Matt's Intro

[00:09:13] Matt Bornstein: No, I'm, I'm a huge data set fan. And, and, you know, we've, we've followed Simon's work for quite a while, and, and Nathan, it's, it's great to have a chance to share a stage with you. I think folks probably saw we you know, released a bunch of sort of, you know, VC version of evaluations. You know, we're way less smart than, you know, Nathan and Simon and a bunch of folks on the in the, in the space here.

[00:09:33] But using just sort of the does-it-feel-good approach and trying to get a fairly representative sample across different types of prompts, the model seems very good. We were playing a lot with 13B and we're playing now with 70B, and it really does give you kind of very fast GPT-3.5-level responses to some questions.

[00:09:54] I think Simon's point about benchmarks is very well taken. It's hard to know how to interpret those. So we sort of go for the direct version, and for creative tasks especially, it seems very good so far. So a lot of what we're doing is just trying to get it out there as much as possible and as fast as possible.

[00:10:11] You know, I I think we should all be incredibly, you know, appreciative that Meta is doing this and it, and it's not, you know, maybe quite perfect, you know, for some of the reasons that folks are are talking about. But you know, I think it's gonna be a huge unlock in open source LLMs and, and we're trying to, you know, just sort of support the community as much as possible.

[00:10:29] a16z Infra's new AI team?

[00:10:29] swyx: Yeah, I have to say, you guys are doing a bang-up job recently. This is a big team effort, right? I see that there's a number of names from your team essentially building projects and then collaborating on this demo. Could you describe what Andreessen's, a16z's, sort of involvement is so far, and like, yeah.

[00:10:50] What, what, what is the scope of this? Yeah.

[00:10:53] Matt Bornstein: You know, we all applied for, you know L three engineer jobs and, and got turned down by all the, all the big tech firms. So we thought, hey, you know, we'll, we'll just do it our ourselves. Yeah. Look, I think, and this might be a little controversial, your average venture capitalist doesn't do any real work, and I completely include myself in this category, you know?

[00:11:14] Allocating resources to support teams is, is important. It's an important function in the economy, but it's, it's what you might call indirect work, which is you're supporting someone else doing something. You know, we just sort of made the decision when we really saw AI starting to take off that we should start doing real work too.

[00:11:31] And it's really just about supporting the ecosystem, especially around open source. Like Simon, we're massive believers that the innovation you see in open source is really gonna be a big unlock for AI-based applications, right? Not everybody can just use the OpenAI API, as good as it is, and not everybody can train a model from scratch, right?

[00:11:52] Not everybody, you know, is Noam Shazeer or someone like that. So we think it's a really huge unlock and, again, we're just trying to support as much as possible. So today we, you know, released a playground to play around with Llama 2. We got it up on Replicate so people can just sort of try it with an API call and try integrating it into their apps.

[00:12:10] We released an AI starter kit over the last couple of weeks which people are actually using. We were shocked. We're, we're a little nervous cuz our, our code, you know, may or may not be production ready. But, but you'll see more and more of this from us over time.

[00:12:23] swyx: Yeah, I've seen your companion chat bot, and I have to say, it's actually pretty impressive.

[00:12:26] It's got all the latest features, especially in terms of streaming and LangChain and all the other stuff. So kudos to your team on that. Just to round out the overviews, or the high-level takes, before we go into individual details: Alessio has been compiling the show notes, which we were gonna publish when this podcast goes live on Latent Space.

[00:12:45] Alessio, maybe you want to go over some of the notes that you've been taking. Then I'll go over to Alex.

[00:12:50] Alessio's recap of Llama 2

[00:12:50] Nathan Lambert: Yeah, we

[00:12:50] Alessio Fanelli: got a, we got a lot of stuff to run through here. I think like the most interesting things that I read from the paper: one, there's an abandoned model size. So the 7 billion, 13 billion and 70 billion made it to release, but there's a 34 billion size that didn't make it.

[00:13:08] And in the safety chart, you can actually see it's like twice as unsafe, quote unquote. And they decided not to publish it because of lack of time to red team it. So I don't know if anybody had a chance to try the 34B before the release, but I would love to learn more about that. Outside of that, yeah, as Simon and Nathan were talking about, the data piece is a lot more obscure.

[00:13:31] So Llama 1 was 67% Common Crawl, 15% C4, a bunch of GitHub, Wikipedia, books, as we mentioned. We don't have any information about Llama 2, but they did mention they have a 40% larger pre-training corpus. So they've obviously been investing a lot in that. Also, yeah, the supervised fine-tuning was very interesting.

[00:13:52] I saw a tweet: somebody asked Llama how to kill a process, and Llama was like, you can't kill things. And I was like, it's just a process. It's not a person. So I think in some places it might have gone too far with the RLHF, but that's another interesting side, right? Like if this is the starting point and like the de facto standard for open source models, are we okay with, you know, not being able to ask how to kill a Linux process?

[00:14:18] But I'm not, I'm not sure about that

[00:14:20] Nathan Lambert: yet.

[00:14:21] Simon Willison: I ran into that myself. I, I asked it to give me all of the animal emoji and it said that that would be disrespectful if it, if it attempted to do that, which was kind of interesting.

[00:14:32] Alessio Fanelli: Exactly. So that's a, that's an open question on open, you know, it's the Joel safety question.

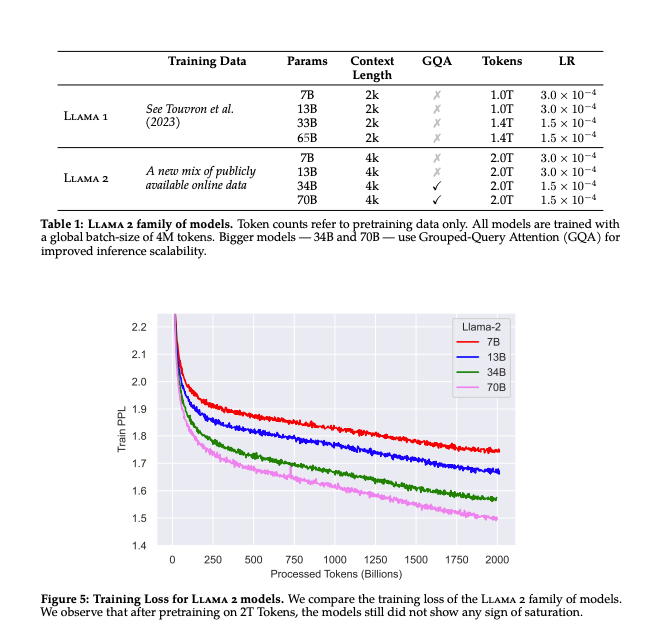

[00:14:39] It's like, how much do we need to do before we release the smartest to the public versus what should that. The public side. The other thing is like, they should have let these GPUs burn for more. Like if you look at the loss graphs, these models are not saturated, I guess. They spent a lot of money to try and train these.

[00:14:56] Datasets 101 Followup

[00:14:56] Alessio Fanelli: But it seems like there's a lot of work left to do there. We just did a Datasets 101 episode that we released yesterday, which is already old news because now Llama 2 is out and this is all the rage. But we talked about some of the scaling laws and we thought the 200x was like the new Llama ratio.

[00:15:12] But I think this one is 275x, Sean, I think.

[00:15:17] swyx: Yeah. So that's, yeah, 2 trillion tokens for the 7B model. And that's, you know, that's up from 1.2 last time. So they've definitely ramped up the amount of data, and they just refuse to tell us any of it because, well, you know, guess what happened last time they published the data: RedPajama went and cloned, you know, line for line exactly what was in the Llama paper.

[00:15:39] So, you know, then that created, you know, the RedPajama model and then OpenLLaMA as well.
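The tokens-per-parameter arithmetic discussed here can be sketched in a few lines. This is a rough illustration only: it uses the 2 trillion token figure and the 7B/13B/70B sizes mentioned in this conversation, and the value of roughly 20 tokens per parameter is the commonly cited Chinchilla rule of thumb, included as an assumption rather than something the speakers state. The helper name `tokens_per_param` is made up for this example.

```python
# Rough tokens-per-parameter arithmetic for the released Llama 2 sizes.
# Assumption: every size saw the same ~2 trillion pretraining tokens.
LLAMA2_TOKENS = 2e12
MODEL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Assumption: commonly cited Chinchilla rule of thumb (~20 tokens per parameter).
CHINCHILLA_TOKENS_PER_PARAM = 20


def tokens_per_param(tokens: float, params: float) -> float:
    """Return how many training tokens were seen per model parameter."""
    return tokens / params


for name, params in MODEL_PARAMS.items():
    ratio = tokens_per_param(LLAMA2_TOKENS, params)
    multiple = ratio / CHINCHILLA_TOKENS_PER_PARAM
    print(f"{name}: {ratio:.1f} tokens/param ({multiple:.1f}x the rule of thumb)")
```

With these inputs the 7B model works out to roughly 286 tokens per parameter, in the same ballpark as the 275x figure quoted on the call.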

[00:15:44] Context Length 4k

[00:15:44] Simon Willison: So I saw it says that the context length is up from the first Llama. Do we know what the new context length is?

[00:15:50] Matt Bornstein: I think it's,

[00:15:50] Nathan Lambert: yeah, 4k. 4k.

[00:15:53] Simon Willison: Is that likely to be higher for the 70B model or are they all the same context length?

[00:15:58] Matt Bornstein: I believe they're all the same, and we have tested it a little bit, and my intuition is that you can actually get more effective performance, more accuracy, out of 4K rather than scaling up the way, say, OpenAI have to 32K or higher. I think it's just hard to find high-quality training data. So when users actually start to submit longer inputs, performance kind of breaks down.

[00:16:22] And I'm not talking about OpenAI specifically, but in general, and that's my intuition on why, you know, Meta is keeping it relatively small for these models.

[00:16:31] Simon Willison: I'm kind of hoping that somebody, now that it's open source, somebody finds some clever trick to increase that. I've been playing with the Claude 100,000 a lot recently and it's pretty phenomenal what you can do once you've got that extra context length.

[00:16:43] swyx: There

[00:16:44] Alex Volkov: is actually a trick. It's called RoPE. We've seen this with a two-line change: you can make Llama forget about the context it was trained on, and there was back and forth about how effective this is and whether or not it suffers from the same dip, you know, in the middle of the context.

[00:16:59] But this RoPE scaling trick was then verified by folks from, I think, Microsoft, independently from that guy kaiokendev, and I see some folks in the audience here who are participating in this. So apparently this applies to the previous Llama and would likely apply to this next one as well.
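One published form of the RoPE scaling trick mentioned here is linear position interpolation: positions are multiplied by a factor below 1 so that a longer sequence maps back into the position range seen during training. The speakers do not say which variant they mean, so the following is a minimal sketch under that assumption, not Llama's actual code. The function name `rope_angles`, the 8192 target length, and the head dimension of 128 are all illustrative choices.

```python
import math


def rope_angles(position: float, head_dim: int, base: float = 10000.0,
                scale: float = 1.0) -> list[float]:
    """Rotation angles RoPE applies at `position`, one per pair of dimensions.

    A `scale` below 1 compresses positions (linear position interpolation).
    """
    scaled_position = position * scale
    return [
        scaled_position * base ** (-2 * i / head_dim)
        for i in range(head_dim // 2)
    ]


TRAINED_CONTEXT = 4096   # context length mentioned for Llama 2
TARGET_CONTEXT = 8192    # hypothetical extended context
scale = TRAINED_CONTEXT / TARGET_CONTEXT  # 0.5

# Position 8000 is outside the trained range, but after scaling it produces
# the same angles as position 4000, which falls inside the trained range.
extended = rope_angles(8000, head_dim=128, scale=scale)
in_range = rope_angles(4000, head_dim=128)
print(all(math.isclose(a, b) for a, b in zip(extended, in_range)))
```

The whole change is the single multiplication by `scale`, which is why it can be described as a change of a line or two; whether quality holds up at the longer length is a separate empirical question.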

[00:17:17] Simon Willison: That's pretty exciting. This is the thing I'm looking forward to: now that it's open source, these experiments are just gonna start happening at such a fast rate. This happened with Llama before. You know, once you let every researcher in the world download and start tinkering with your model, people start finding optimizations and new tricks at a crazy rate.

[00:17:37] It's gonna be really interesting.

[00:17:39] Nathan Lambert: So

[00:17:39] Alex Volkov: I think the interesting piece here is to see whether or not the commercial license will unlock even more, or whether the researchers didn't care and kinda threw the kitchen sink of everything they wanted to hack together at the previous Llama. I'm thinking, because it's open source commercially now, companies will actually start, you know, doubling down, because they will be able to then use the fruits of their labor for commercial purposes.

[00:18:02] So we'll likely see

[00:18:04] Alessio Fanelli: more.

[00:18:05] Open-ish Source? Usage Policy and Restrictions

[00:18:05] Alessio Fanelli: I think you guys use the magic word, which is open source, and everybody has a, has a different, different definition. And I know we had Tom Warren in the audience who asked the question about this. So Tom, I'm gonna invite you up to speak if you're around.

[00:18:18] Simon Willison: Yeah. I'm gonna say, call it, I, I say openly licensed, not open source, because I feel like open source has a definition, this doesn't quite apply here.

[00:18:27] Alessio Fanelli: Yeah, yeah, exactly. If you go on my website, I actually wrote like a 10,000-word thing on the history of open source licensing, and there's things that are open source, and things that are somewhat open source. In traditional infra, that's like the Server Side Public License, some of these things that Elastic and Mongo came up with to avoid the AWS "API-compatible", in quotes, products that were literally just the same thing.

[00:18:51] So yeah, it's really curious also that the breakpoint for the Llama license is 700 million monthly active users, which is a lot of users obviously, but there's some notable people that go over it. So Snapchat is one company that is obviously a close competitor to Meta. TikTok is in there.

[00:19:10] YouTube, by far exceeds that

[00:19:13] Simon Willison: amount. Yeah. It's worth noting that that's actually not a rule going forward; it's as of the date of the release. If you have 700 million monthly users, you have to get an extra license from Meta. If you manage to achieve 700 million monthly users next week, you could still use it.

[00:19:30] Like it's, it's, it's, it's that point in time that

[00:19:32] swyx: matters. Yeah, at that point they should just name people. But yeah. Just to close the loop on this open source element, you know, there's one other piece of the open source, or the usage policy, which is you can't use it to train any other model.

[00:19:44] Thou shalt not have any other models before Llama. Llama is the only model that you can fine-tune with Llama data.

[00:19:52] Simon Willison: I think it's more than that. They're protecting against distilling the model, right? The thing that everyone's been doing, like Vicuna was trained on ChatGPT data, despite OpenAI having a thing in their terms that says you can't train a competing model.

[00:20:04] I'm really frustrated by this because the language says you cannot train a competing large language model. But what does that even mean? Who gets to decide what a large language model is? If in six months' time we invent a new architecture, is that still an LLM that's covered under those terms?

[00:20:20] It's, it's frustratingly vague.

[00:20:22] Nathan Lambert: Yeah, these clauses are kind of bogus. We talk about them a lot at Hugging Face. And it seems also from a legal perspective, the things that they're grounded in, like terms of service, are being walked back in kind of this digital domain. And then also it's just like unclear what is actually using the language model.

[00:20:40] So all these things where people use language models as a judge, or you can just generate a bunch of interesting prompts to then modify them. It's so ridiculous to even think of trying to enforce these clauses. It's surprising to see it show up,

[00:20:54] swyx: which you have to note, like in the Llama 2 paper itself, they also use other companies' models to do their evaluations.

[00:21:02] Right? And, you know, a strict reading of those clauses would not allow them to do that.

[00:21:08] Huggingface Responsible AI License

[00:21:08] swyx: Nathan, actually a quick follow-up. Hugging Face has its own license, the RAIL license. I think there was some iteration following the Stable Diffusion release. Would that be appropriate for something like Llama 2?

[00:21:19] Nathan Lambert: Yeah, I think it's good. I don't have a hundred percent knowledge of RAIL. My understanding is that generally the goal is to be like commercially available with good intention, and then it starts to try to give leverage for people to come after bad actors using their models.

[00:21:37] I think the commercial use of this is gonna be off the charts very soon. Like at Hugging Face, a lot of the monetization efforts are around trying to enable commercial use of open source language models. And the license questions have been a constant discussion for the last six months, from things we're trying to build and from customers.

[00:21:57] So like this is definitely going to

[00:21:59] swyx: be used. Yeah. Yeah. Okay. So we have a lot of, you know, insightful people here.

[00:22:07] I feel like the, the best way to organize this space is maybe to just kind of try to stick to as, as many sort of factual elements as we, as we can.

[00:22:15] I feel like, Nathan, since you've done the most work, you've had the most time with the paper, to be honest. Maybe sort of pick one other element of the paper that you find worth discussing and we can kind of go into that.

[00:22:27] Pretraining Llama 2 Base Model beyond Chinchilla

[00:22:27] swyx: Maybe the, sort of the, the pre-training base model stuff.

[00:22:30] Nathan Lambert: Like, I don't think there's a lot on the pre-training. There's definitely an important thing that makes it able to be used, which is they use, like, what is GQA? It's like grouped-query attention, which will make inference on the bigger models faster. I think there's kind of an asterisk that is interesting on that: code and math and reasoning seem pretty

[00:22:49] not emphasized in the paper, and that's what their kind of like market for. That's what ChatGPT is used by a lot of people on this call for. I think at a technical level, the RLHF details are the most fleshed out that we have seen. Sure. And kind of confirm a lot of the capabilities we've seen insinuated by Anthropic and OpenAI.

[00:23:11] So that was like kind of a relief for me as someone that's trying to be like, I still think this really works. And they dropped this paper that's like, we really like this, which was not guaranteed.

[00:23:22] Matt Bornstein: I have one pre-training question. And this is for you, Nathan, or for the whole group. Like we talked about it before.

[00:23:27] The amount of pre-training data here goes far beyond Chinchilla-optimal and the loss curves were still going down when they cut it off. Like, are we ready to say that Chinchilla-optimal is just not optimal anymore?

[00:23:43] Nathan Lambert: Oh, I'm ready. I never really cared about it. Like I think data quality is changing that completely.

[00:23:51] It's like, I think when Chinchilla came out, data quality standards were so different, and given what the practices are now, it's like, what does it mean?

[00:24:03] Matt Bornstein: It was a really big deal at the time though, right? I mean, it was kind of this breathtaking result that if you just ramp up training data much higher than you thought or people had been doing, you just kept getting better performance.

[00:24:15] Maybe, Nathan, since you're, you know, the most knowledgeable on this space, can you just give us a little intuition? Like when you say better data quality, what exactly is happening under the hood that makes this possible now?

[00:24:26] Nathan Lambert: Oh, they're removing. Okay. Think about all the tweets and texts that everyone sends, and we have these weird insider jokes and phrasings that we do.

[00:24:37] They make no sense if you read them, and your language model, like, half reproduces them. So, like, I'll say "you got got," or something that is just very confusing from a token prediction standpoint, and then also a ton of just errors. It's like, I write a blog post. I used to not take it as seriously. I've published a blog with a half-finished sentence in it.

[00:25:00] It's like they would just scrape that and take it, but trying to actually get data that is complete, is consistent, is just extremely hard. I think the technical terms are like deduplication, so you don't wanna pass the model the same text, even if it came from different websites, and there's tons more that goes into this.

[00:25:21] I, I don't think it's the area of my most expertise, but I think it's actually pretty simple. You just wanna put good text into the model and understanding what good text is on the internet is really hard.
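As a rough illustration of the deduplication idea Nathan describes, here is a minimal sketch of exact-match deduplication over normalized text. It is not Meta's pipeline (the paper does not spell one out in this discussion); the `normalize` and `deduplicate` helpers and the sample strings are assumptions made up for the example, and real pipelines typically add fuzzy matching and quality filtering on top.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized text."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


# Hypothetical scraped snippets: the second is the first one copied to another site.
scraped = [
    "You just wanna put good text into the model.",
    "you just wanna   put good text into the model.",
    "Understanding what good text is on the internet is really hard.",
]
print(len(deduplicate(scraped)))
```

Hashing the normalized text keeps memory use small, at the cost of only catching near-identical copies.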

[00:25:34] Matt Bornstein: So you're sort of saying the reason people were using not enough data initially is cuz they just weren't good enough at cleaning it. And now that those methods have advanced so much, we're removing duplicates better, we can measure quality better, all of that. Like, do you think we're gonna keep going up, I guess is the question. Like, you know, they trained a 7B model on 2 trillion tokens.

[00:25:52] Like, do you think that's the maximum or are we gonna keep going?

[00:25:55] Nathan Lambert: I kind of like, I, I think the intuition on like what you're saying is how getting more higher quality data is making it so using more works better. I like, that's what everyone in my circles is saying is the trend and given machine learning in the last few years, I think trends tend to be stickier than most people expect them to be.

[00:26:17] So I would expect it to keep going. I just kind of trust the process to continue for a lot of stuff like this.

[00:26:22] swyx: Yeah. So on our podcast, we've been asking everyone that we can possibly ask about, you know, we went from a 2x tokens-to-params ratio with Kaplan, and then 20x with Chinchilla, now 200x with Llama, like someone's gonna try 2000.

[00:26:37] Right? We did have a response today from one of our previous guests, Varun of Codeium, who said that they did try a thousand-to-one tokens-to-params ratio. And it's definitely gone into the range of overfitting. So your loss can continue to go down, but you're not sort of measuring overfitting in some of that respect.

[00:26:53] So it's very unclear. I would say though, you know, I do have visual sources. Like, it's not that Chinchilla was wrong. Chinchilla was optimizing for a particular set of assumptions, particularly the pre-training compute budget, right? Compute-optimal sort of scaling laws. And if you look at the Llama paper, right on the first page, I have it open right in front of me.

[00:27:12] They actually criticize that and say, you know, this disregards the inference budget, which is critical when you're actually serving the model instead of just optimizing for a pre-training compute objective. And as things move from research into production, inference starts to become more of a concern.

[00:27:28] Resource constraints start becoming more of a concern. And so I think it's actually quite reasonable to move on from Chinchilla, which is a very important result, and say that, you know, we are exploring very different objectives as compared to, you know, more than a year ago when Chinchilla was published.
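To make the ratios in this exchange concrete, here is a small back-of-the-envelope sketch. The `tokens_per_param` helper is a name invented for the example; the parameter and token counts are the publicly reported headline figures (Chinchilla at 70B parameters and 1.4T tokens, Llama 2 at 2T tokens), rounded.

```python
# Rough tokens-per-parameter ratios for the models mentioned in the discussion.
# Counts are rounded headline figures, not exact training-run numbers.


def tokens_per_param(train_tokens: float, params: float) -> float:
    """Return how many training tokens were seen per model parameter."""
    return train_tokens / params


runs = {
    "Chinchilla 70B (1.4T tokens)": (1.4e12, 70e9),
    "Llama 2 7B (2T tokens)": (2.0e12, 7e9),
    "Llama 2 70B (2T tokens)": (2.0e12, 70e9),
}

for name, (tokens, params) in runs.items():
    print(f"{name}: ~{tokens_per_param(tokens, params):.0f} tokens per parameter")
```

This works out to roughly 20 tokens per parameter for Chinchilla, about 286 for the 7B Llama 2 model, and about 29 for the 70B one, so the "200x" figure really describes the smallest model in the family.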

[00:27:45] Llama 2 is incomplete? Race to publish

[00:27:45] Nathan Lambert: Yeah, I agree. I was just gonna say that I feel like the loss was going down, like all of these... Reading the paper, it feels like this is a checkpoint of a much longer term project. They readily list off things that they didn't get to but they want to continue, like capabilities or something.

[00:28:03] Some of the methods seem like kind of hacks to make things work that they didn't get to work otherwise. Like Anthropic came up with context distillation, which is a way of getting the behavior of a really long system prompt into a shorter prompt, essentially. And they did something like this in this paper to get the model to behave like characters for longer conversation turns.

[00:28:27] And like, there's all sorts of little things that I just think Meta is going to continue this and...

[00:28:34] Simon Willison: So that's kinda fascinating cuz that that implies that the, the actual story here, it's the AI arms race, right? It's, it's, it's Zuckerberg saying, no, we need to get something out right now. Get it to a point where it's good enough and safe enough and then let's ship it.

[00:28:46] And it's not so much that they, they, they didn't necessarily have time to get to the sort of perfect point that they wanted to get to.

[00:28:54] swyx: Yeah, I have asked people about this offline, and I was like, okay, so why don't people throw a lot more compute at this? And they're like, you know, as long as you have a state-of-the-art model, you should just ship it and get credit and then wait a few months and then get the next version out.

[00:29:08] That way you have a lot more shots on goal.

[00:29:11] Simon Willison: That totally makes sense. Yeah.

[00:29:14] swyx: And I was like, oh, okay. Like we are in such early stages that honestly, I mean, they spent 3 million GPU hours on this thing. They could spend 30 million and, like, obviously it would be way better. Like we're in such early stages that even these relatively simple...

[00:29:27] Like don't forget Llama 1 was published in February of this year. We're in such an easy cycle where it's still within, you know, the order of months to make and improve one of these things, that it's not too terrible.

[00:29:40] Come for the Llama, stay for the (Meta) drama

[00:29:40] swyx: I guess I should also mention, as a shout out, that not every person who worked on Llama 2 is on the paper.

[00:29:48] Guillaume Lample, who's one of the co-founders of Mistral, the French startup that raised like a hundred million seed round, apparently worked on Llama 2, and they left his team out because they left Meta before this paper was published. So interesting passage

[00:30:03] there, if anyone wants to go through that.

[00:30:05] Alessio Fanelli: Come for the Llama, stay for the drama. Oh, it's hard. It's hard to read, you know, into this, especially when it comes to work that then goes open source. It's always "we did the work," "we didn't." I don't know. Since nobody here worked at Meta, I would rather not go down that path.

[00:30:23] Yeah,

[00:30:23] swyx: I, I'll just leave a bookmark there. Okay. Yeah, but exactly.

[00:30:26] Nathan Lambert: We're not in the room there.

[00:30:28] Matt Bornstein: I, for one, am shocked to hear that there may be drama among researchers. I've never heard of that happening before.

[00:30:34] Nathan Lambert: Right. Especially after three organizational restructures, with researchers playing hopscotch from one org to another, and being in between jobs.

[00:30:43] I don't know.

[00:30:45] swyx: All right. Alex, do you have your hand up? And then I wanted to dig more on the preference data that Nathan mentioned. Mm-hmm.

[00:30:52] Language Translation

[00:30:52] Alex Volkov: Hey guys. Just to introduce myself real quick, I'm Alex, a participant in the spaces, and my angle and the way I, quote unquote, vibe check models is via languages.

[00:31:03] And to me, it was really surprising that they released kind of the second iteration while also knowing how much Meta actually does for translation. They have the very famous NLLB models, No Language Left Behind. They released the world models that you can speak in multiple, like a thousand languages that it understands, and for some reason, their open source models

[00:31:23] are not very strong multilingually. So we've seen this with GPT-4, which was way better at multilingual speak. Claude highlighted this point with Claude 2, that it is way better at the BLEU score, I think, for languages. And my go-to vibe check with these models, especially the open source ones, is the ability to translate, the ability to understand the languages.

[00:31:46] I've tried it with, with Hebrew a little bit. I've tried with. Very, very impressed. Now, obviously fine tuning will come and obviously people will fine tune these, these models towards different outcomes, but it's very interesting considering how much meta does elsewhere for languages and to bring the world together.

[00:32:02] How much kind of this model did not focus on this specific kind of issue. And the second thing is also code. I know you guys talked about HumanEval. That's fairly low in terms of the score out of the box. And obviously fine tuning will make it better, but a fairly disappointing score on HumanEval, right?

[00:32:22] Fairly low coding abilities. And we've seen previously that there's some assumption that training on more code in your dataset actually gives you better kinda logic and reasoning abilities. So I'm kind of surprised that that was fairly low. Wanted to chime in with these two examples about Llama.

[00:32:40] Llama2's coding abilities

[00:32:40] swyx: I'll say on the HumanEval piece, don't count it out just yet. So I've had some DMs with Quinn Slack of Sourcegraph, and he's, you know, very actively building Cody, their coding assistant bot. And it's well known that HumanEval is not a very good or reflective measure of how we use coding chatbots.

[00:32:59] And so, like, HumanEval is probably overrepresented in terms of being effectively the sole benchmark by which we value code models. We just need new benchmarks for code.

[00:33:11] Matt Bornstein: I do think it's possible better instruction tuning will improve code performance of the Llama 2 models as well, because their reasoning capabilities are actually relatively good. Not perfect, but relatively good, which makes me think there may be more code in the pre-training than it seems.

[00:33:26] swyx: Well it's difficult to know cuz they don't talk.

[00:33:29] We'll, we'll see, we'll see.

[00:33:31] Why we want to know about the training data

[00:33:31] Simon Willison: I mean, this is the thing that's so infuriating about these opaque models that don't talk about their training data is as users of the models, we need to know, we need to know how much, like if it's had code in it, all of those kinds of things in order to make decisions about what we're going to use it for.

[00:33:45] So I kind of feel like you know, the, the, the secrecy around these models really hurts me as a consumer of these models, just from a practical point of view of being able to make good judgements about what the model's gonna like to be able to do.

[00:33:55] Matt Bornstein: I do think that's true, Simon. You know, I wanna make just one defense of Meta, which is like, this is pretty amazing what they've released and, you know, given to the world. Obviously it may benefit them commercially as well, but, you know, it actually carries pretty substantial risks for them, and I actually think it's kind of a courageous act to release. And, you know, it's the things like the training data,

[00:34:20] safety, that like really, you know, when you're Meta and you have billions of active users, you actually are taking a pretty big risk with these things. And, you know, regulatory bodies have their sights on you. So I do think you're right. I just, you know, for what it's worth, wanna say it's actually a positive thing.

[00:34:37] Simon Willison: I agree.

[00:34:38] I agree with everything you say, but at the same time, right now, I've got a whole bunch of models that I'm trying to choose between, and I don't have the information I need to make the decision. I feel like at some point it's going to be a competitive advantage to put out a model with transparency over what went into the data.

[00:34:55] Cause people will be able to use that model more effectively. But yeah, I completely understand the strategic challenges. I'm astonished that Meta went ahead with this release. I never thought they'd take the risk of releasing something like this and someone uses it for something bad and now they're on the front page of all of the papers for it.

[00:35:12] So yeah, I'm super excited about it on that front.

[00:35:15] The importance of Meta pushing forward Truly Open AI

[00:35:15] Alex Volkov: I wanna echo that. Yeah. I know, from the perspective of releasing something as open source as they did, previously we didn't have commercial licensing, obviously. Now the big thing is we have commercial licensing. But the amount of people, I don't know if you guys noticed, the amount of people who signed, quote unquote, in support of releasing these models, Paul Graham and Marc Andreessen, and like a bunch of other folks. Like in addition to the model, they also released kind of a counterweight to the moratorium papers and all the AI safety stuff.

[00:35:41] Because there was an FTC probe, right? There was like some regulatory stuff talking about the previous releases of Llama from a long time ago. And now not only did they release the, quote unquote, open source. So unless it doesn't kick me off here. Not fully open source, but definitely we're able to use this commercially.

[00:36:00] But they also released kind of an industry leaders' statement saying, like, open source is needed. And I think that gives a very strong counterweight to the moratorium and the keep-it-closed, don't-release kind of thing we saw. And it's very interesting that it comes from Meta specifically.

[00:36:16] So in addition to the courageousness of what they did, it looks like they're also kind of leading the industry in terms of, like, this is how to do fully commercial, again, quote unquote open source, not an open source license, but this is how to release models in a safe way. So definitely joining the applause for Meta and the team for the courage.

[00:36:35] Nathan Lambert: Yeah, I just don't think that, like, we're not the customers of Meta with respect to this model. I think they're trying to build these for their own purposes, and then they have very strong, like, I think it's kind of the principles of transparency and research that these organizations at Meta have stood by. And I think that's like the newest representation of it, more than, like, and I don't think they're trying to make money off releasing this in any way. Like there is an ecosystem perspective of, like, where AI content proliferates, there's more creativity for their users and that enables social media and things.

[00:37:08] But I think we're still pretty far from that. And it's more of like a values and internal research and development tool for themselves. Like, is there a way for them to make money directly off of this?

[00:37:19] Alessio Fanelli: NPCs and the Metaverse. But I mean, I don't know.

[00:37:23] swyx: Well, so we, we, we last hosted one of these emergency pods, I think maybe two, two pods ago.

[00:37:28] Which was, I think, in May, when the No Moats memo came out from Google. And we actually talked a little bit about what an ecosystem around a language model looks like when you have stackable LoRAs, customizing and fine tunes that are based on top of an existing base model that is well known.

[00:37:48] I, I think that might be part of the strategy there. You know Facebook is also well known for releasing, I guess, PyTorch and, and React. And, and those are very well, like, they don't make money from that directly, but they definitely do benefit from the ecosystem that has sprung around it, that, that essentially represents a lot of free development from, from the open source community.

[00:38:07] Simon Willison: I think there's a lot to be said for the fact that Meta AI are at the very heart of openly licensed language model research, and that's because of Llama. You know, Llama came out and it kicked off this immense tidal wave of interest and of activity, with Meta AI right at the very center of that. And in the world that we live in right now, being at the very center of all of the research and innovation happening around language models feels like a really valuable place to be.

[00:38:31] Llama 2 as Enabler of Startups

[00:38:31] swyx: Yeah, it really is. And maybe we can go a little bit to Matt again. One thing I wanted to get your thoughts on, you know, I don't know how long you have with us, is the impact on the startup ecosystem, right? Like how big of an enabler is this? Or does this, I guess, just commoditize everything to a point where, you know, everyone's just wrappers?

[00:38:50] Matt Bornstein: I think it's a really, really massive deal. You know, we've met with, conservatively, hundreds of AI startups now, maybe thousands. We'd have to go back and look. And I sort of alluded to this before, but the really big dilemma is: do I train my own model or do I just use something off the shelf?

[00:39:15] And we're increasingly seeing that the answer for almost everybody is kind of a hybrid approach. We're seeing an increasing number of startups basically triage their AI workloads, where if things require, you know, really high levels of accuracy and, you know, human-like text generation, GPT-4 is the only answer.

[00:39:38] But many queries or workloads actually don't require that, right? So you can kind of scale down and say, you know, for a really simple query, I can use, you know, an open source model off the shelf; for something in the middle, I can fine tune for various tasks. And then you can get pretty sophisticated about what you route where. All of that is only possible if we have commercially usable, really high quality language models, and especially ones that have been efficiently trained such that latency is low and cost is relatively low.

[00:40:09] So I think what we're gonna see happen is there's gonna be a big push for startups to use Llama 2 models and other open source models that have similar levels of performance. Fine tune it in ways that actually work for specific tasks, right? Not for specific data, like I think that was sort of a head fake, but for specific tasks, and really be able to build more defensible businesses that way.

[00:40:34] You know, there's nothing wrong with using OpenAI. That's fantastic, but it's probably not good to make that a hundred percent of your business. And a lot of founders are doing that now. So that's why I think this is such a huge deal and, you know, the progress just today has been amazing.

[00:40:51] Like, there's gonna be, by the end of today, a number of hosts where you can just easily use the Llama 2 models, like right outta the box. You know, Replicate's one that we work with, but there are others as well. You know, you can already run it on your local computer with two bit precision, which is kind of crazy if you stop and think about that for a second, that with two bits you can actually run a super advanced language model on your own computer.

[00:41:15] So I, I think I, I just think this is a huge, huge deal for startups and I think if you're a startup founder working in ai, you know, you, you really should be taking a look at, at open source models now and seeing how they, how they can be used to, to kind of deepen your moat and, and, you know, build a really great AI product.
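The workload triage Matt describes can be sketched as a tiny router. Everything here is hypothetical and only illustrates the shape of the idea: the `Query` fields, the `FINE_TUNED_TASKS` set, and the tier names returned by `route` are made up for the example and do not refer to any real API or product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Query:
    text: str
    needs_high_accuracy: bool = False  # e.g. customer-facing, human-like text
    task: Optional[str] = None         # a task we may have a fine-tune for


# Hypothetical tasks for which a fine-tuned open model exists.
FINE_TUNED_TASKS = {"summarize", "classify"}


def route(query: Query) -> str:
    """Pick a model tier for a query, most expensive tier only when required."""
    if query.needs_high_accuracy:
        return "hosted-frontier-model"
    if query.task in FINE_TUNED_TASKS:
        return f"fine-tuned-open-model:{query.task}"
    return "open-model-off-the-shelf"


print(route(Query("Draft a contract clause", needs_high_accuracy=True)))
print(route(Query("Summarize this ticket", task="summarize")))
print(route(Query("Is this message a greeting?")))
```

In practice the hard part is deciding which bucket a query belongs in; the sketch assumes that label is already known.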

[00:41:34] Where you can try Llama 2

[00:41:34] swyx: Right. So I would like to help fill in the blanks. So apart from Replicate, it looks like Hugging Face has also launched an inference endpoint for that. And as far as I know, it's one of the only few ways to try the 70B model off the shelf. I think Baseten has also maybe put something up. And then for the two bit quantized model, you can look at the GGML ecosystem.

[00:41:55] Do you need dataset transparency if you have evals?

[00:41:55] swyx: Yeah. And then I also wanted to recognize one of the other respondents in our chat, we have a little comment window here. ARD Doshi was responding, I think, to Simon. And I did actually have a pushback, right? Like, we don't have to know the full data sets of Llama as long as we are able to eval for everything that we want to know about.

[00:42:13] I think we actually have to live with AI becoming more and more of a black box. Even though the weights are open I mean for me it

... [Content truncated due to size limits]