AI News for 2/3/2026-2/4/2026. We checked 12 subreddits, 544 Twitters and 24 Discords (254 channels, and 10187 messages) for you. Estimated reading time saved (at 200wpm): 795 minutes. AINews’ website lets you search all past issues. As a reminder, AINews is now a section of Latent Space. You can opt in/out of email frequencies!

It is our policy to give the title story to AI companies that cross into decacorn status, to celebrate their rarity and look back at their growth, but it seems that it is less rare these days… today not only did Sequoia, a16z and ICONIQ lead the Eleven@11 round (WSJ), but it was promptly upstaged by Cerebras which, after their 750MW OpenAI deal (valued at $10B over 3 years), had a DOUBLE decacorn round at $1B at $23B from Tiger Global… only 5 months after they were valued at $8B.

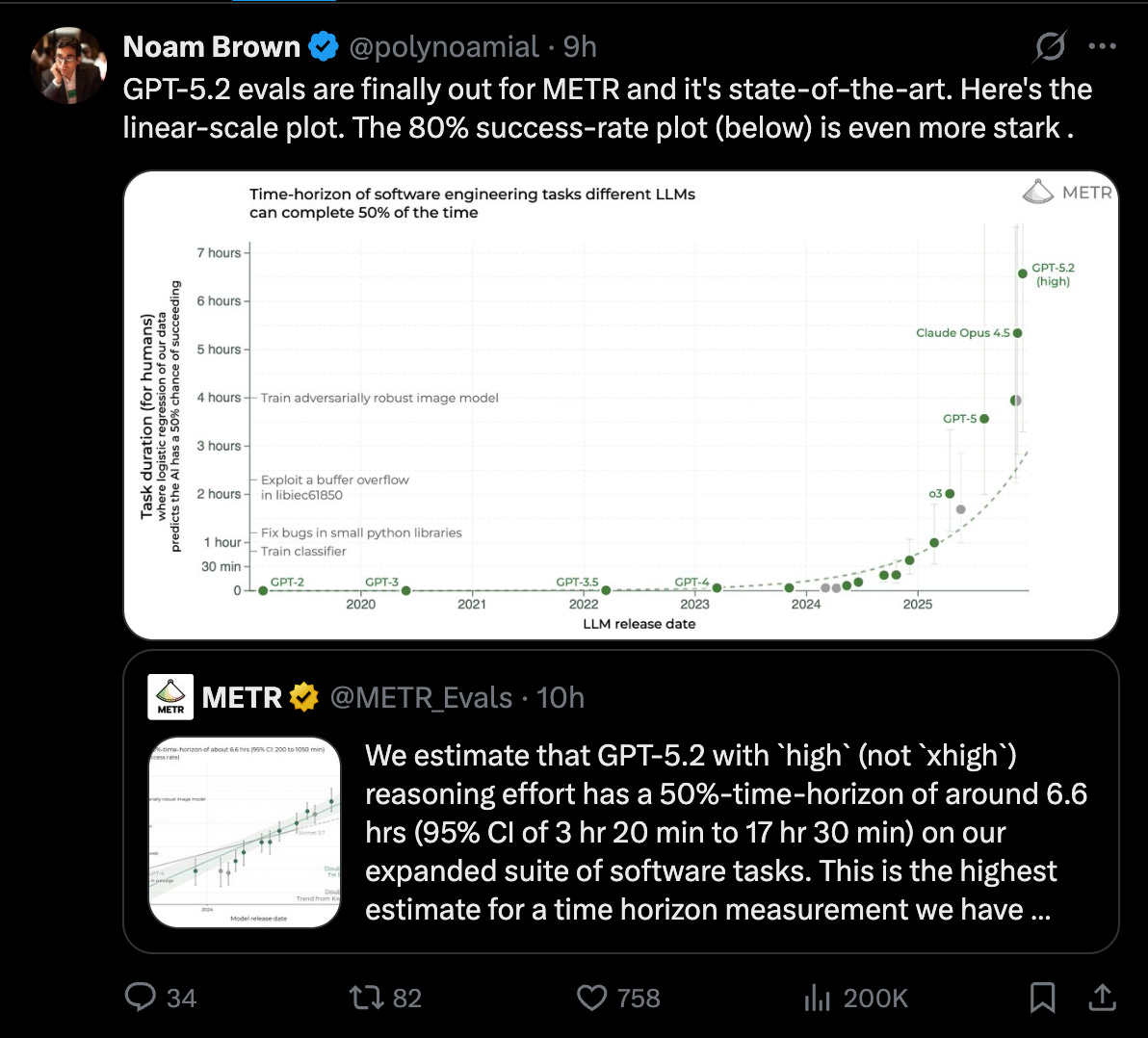

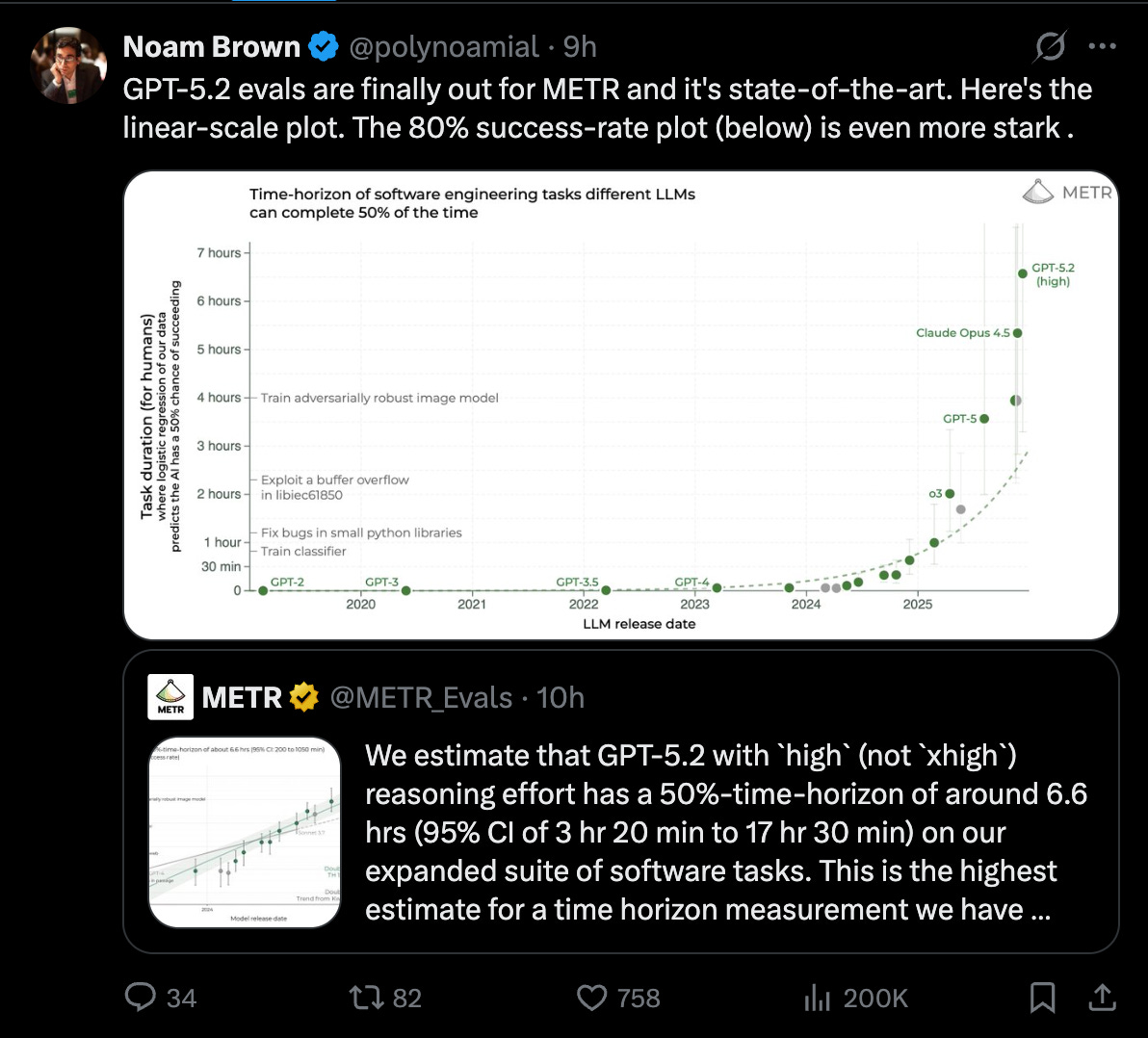

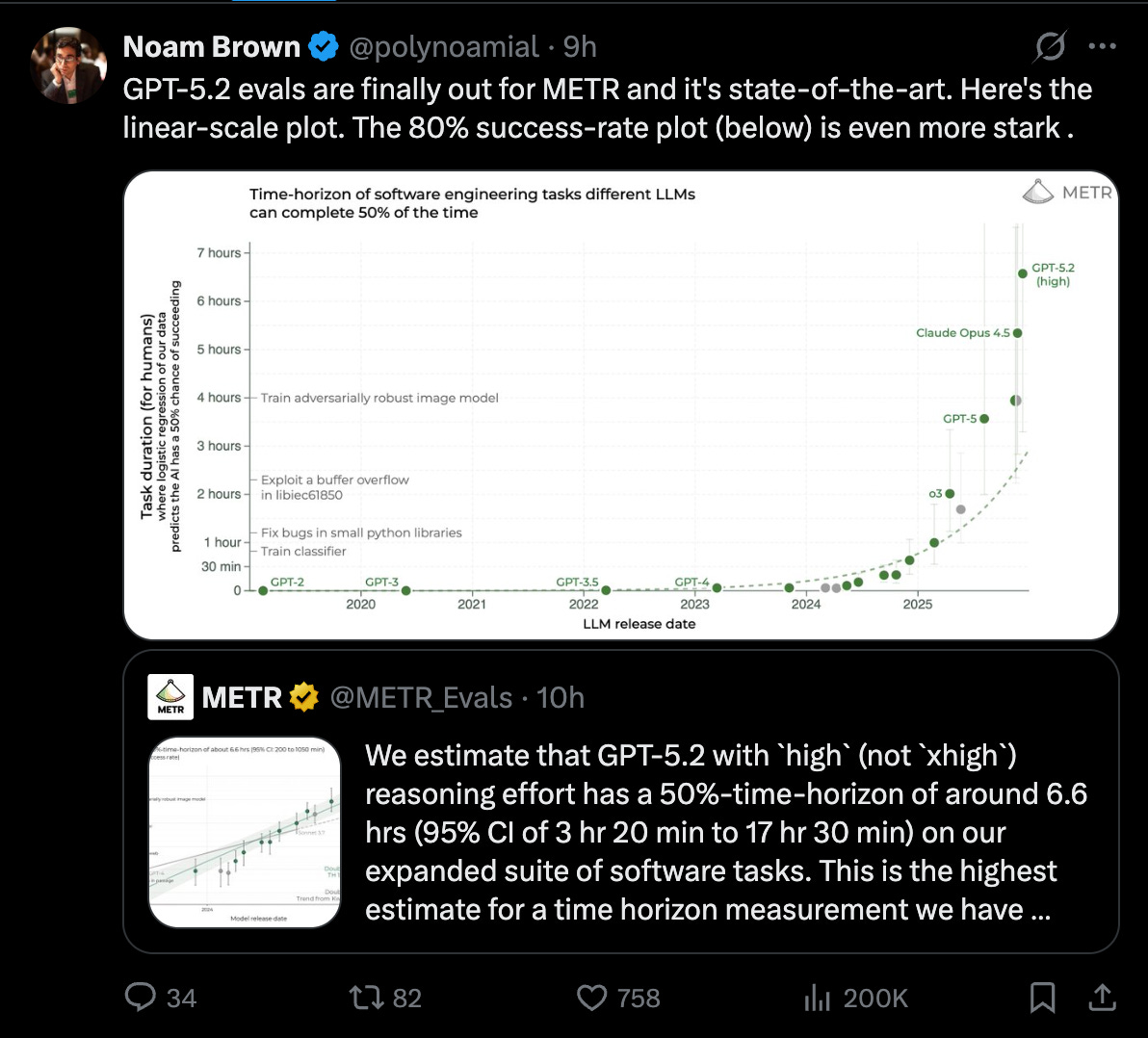

It’s also the 1 year anniversary of Vibe Coding, and Andrej has nominated Agentic Engineering as the new meta of the year, even as METR anoints GPT 5.2 High as the new 6.6 hour human task model, beating Opus 4.5, and sama announces 1m MAU of Codex.

AI Twitter Recap

Big Tech productization: Gemini 3 everywhere (Chrome, app scale, “game” evals)

- Chrome side panel on Gemini 3: Google is now shipping a new Chrome side panel experience “running on Gemini 3,” plus Nano Banana integration (Google’s phrasing) and other UX changes, signaling continued tight coupling of browser workflow + LLM features (Google).

- Gemini scale + cost curve: Google executives and analysts emphasized rapid Gemini adoption and big serving-cost reductions: Sundar reports Gemini 3 adoption “faster than any other model” and Alphabet crossing $400B annual revenue (@sundarpichai), while another clip cites 78% unit-cost reduction for Gemini serving across 2025 (financialjuice). A separate datapoint claims the Gemini app hit 750M+ MAU in Q4 2025 (OfficialLoganK); commentary notes this puts Gemini within striking distance of publicly reported ChatGPT MAU (Yuchenj_UW).

- Benchmarking via games: Google is pushing “soft skills” evaluation by letting models compete in games (Poker/Werewolf/Chess) through the Kaggle Game Arena, framed as testing planning/communication/decision-making under uncertainty before deployment (Google, Google, Google). This sits alongside broader industry moves to replace saturated benchmarks with more “economically useful work” measures (see Artificial Analysis update summarized by DeepLearningAI, below).

Coding agents converge in the IDE: VS Code “Agent Sessions”, GitHub Copilot Agents, Codex + Claude inside your workflow

- VS Code’s agent pivot: VS Code shipped a major update positioning itself as “home for coding agents,” including a unified Agent Sessions workspace for local/background/cloud agents, Claude + Codex support, parallel subagents, and an integrated browser (VS Code; pierceboggan). Insiders builds add Hooks, skills as slash commands, Claude.md support, and request queueing (pierceboggan).

- GitHub Copilot adds model/agent choice: GitHub announced you can use Claude and OpenAI Codex agents within GitHub/VS Code via Copilot Pro+/Enterprise, selecting an agent by intent and letting it clear backlogs async in existing workflows (GitHub; kdaigle). Anecdotally, engineers highlight the “remote async agent” workflow as the real unlock vs purely interactive chat coding (intellectronica).

- Codex distribution + harness details: OpenAI and OpenAI DevRel pushed adoption stats (500K downloads early; later 1M+ active users) and expanded surfaces (App/CLI/web/IDE integrations) backed by a shared “Codex harness” exposed via a JSON-RPC “Codex App Server” protocol (OpenAI, @sama, OpenAIDevs).

- Friction points remain: Some users report Codex running in CPU-only sandboxes / not seeing GPUs (and request GPU support) (Yuchenj_UW, tunguz), while OpenAI DevRel pushes back that GPU processes work and asks for repros (reach_vb).

- OpenClaw/agent communities become “platforms”: OpenClaw meetups (ClawCon) and ecosystem tooling (e.g., ClawHub, CLI updates) show how quickly coding-agent communities are professionalizing around workflows, security, and distribution (forkbombETH, swyx).

Agent architecture & observability: “skills”, subagents, MCP Apps, and why tracing replaces stack traces

- deepagents: skills + subagents, durable execution: LangChain’s deepagents shipped support for adding skills to subagents, standardizing on

.agents/skills, and improving thread resuming/UX (multiple release notes across maintainers) (sydneyrunkle, LangChain_OSS, masondrxy). The positioning: keep the main context clean via context isolation (subagents) plus agent specialization (skills) rather than choosing one (Vtrivedy10). - MCP evolves into “apps”: OpenAI Devs announced ChatGPT now has full support for MCP Apps, aligning with an MCP Apps spec derived from the ChatGPT Apps SDK—aimed at making “apps that adhere to the spec” portable into ChatGPT (OpenAIDevs).

- Skills vs MCP: different layers: A useful conceptual split: MCP tools extend runtime capabilities via external connections, while “skills” encode domain procedure/knowledge locally to shape reasoning (not just data access) (tuanacelik).

- Observability becomes evaluation: LangChain repeatedly emphasized that agent failures are “reasoning failures” across long tool-call traces, so debugging shifts from stack traces to trace-driven evaluation and regression testing (LangChain). Case studies push the same theme: ServiceNow orchestrating specialized agents across 8+ lifecycle stages with supervisor architectures, plus Monte Carlo launching “hundreds of sub-agents” for parallel investigations (LangChain, LangChain).

Models, benchmarks, and systems: METR time horizons, Perplexity DRACO, vLLM on GB200, and open scientific MoEs

- METR “time horizon” jumps for GPT-5.2 (with controversy around runtime reporting): METR reported GPT-5.2 (high reasoning effort) at a ~6.6 hour 50%-time-horizon on an expanded software-task suite, with wide CIs (3h20m–17h30m) (METR_Evals). Discourse fixated on “working time” vs capability: claims that GPT-5.2 took 26× longer than Opus circulated (scaling01), then METR-related clarification suggested a bug counting queue time and scaffold differences (token budgets, scaffolding choice) skewed the working_time metric (vvvincent_c). Net: the headline capability signal (longer-horizon success) seems real, but wall-clock comparisons were noisy and partially broken.

- Perplexity Deep Research + DRACO: Perplexity rolled out an “Advanced” Deep Research claiming SOTA on external benchmarks and strong performance across decision-heavy verticals; they also released DRACO as an open-source benchmark with rubrics/methodology and a Hugging Face dataset (perplexity_ai, AravSrinivas, perplexity_ai).

- vLLM performance on NVIDIA GB200: vLLM reported 26.2K prefill TPGS and 10.1K decode TPGS for DeepSeek R1/V3, claiming 3–5× throughput vs H200 with half the GPUs, enabled by NVFP4/FP8 GEMMs, kernel fusion, and weight offloading with async prefetch (vllm_project). vLLM also added “day-0” support for Mistral’s streaming ASR model and introduced a Realtime API endpoint (

/v1/realtime) (vllm_project). - Open scientific MoE arms race: Shanghai AI Lab’s Intern-S1-Pro was described as a 1T-parameter MoE with 512 experts (22B active) and architectural details like Fourier Position Encoding and MoE routing variants (bycloudai). Separate commentary suggests “very high sparsity” (hundreds of experts) is becoming standard in some ecosystems (teortaxesTex).

- Benchmark refresh: Artificial Analysis: Artificial Analysis released Intelligence Index v4.0, swapping saturated tests for benchmarks emphasizing “economically useful work,” factual reliability, and reasoning; GPT-5.2 leads a tight pack per their reshuffle (summary via DeepLearningAI) (DeepLearningAI).

Multimodal generation: video-with-audio arenas, Grok Imagine’s climb, Kling 3.0, and Qwen image editing

- Video evals get more granular: Artificial Analysis launched a Video with Audio Arena to separately benchmark models that natively generate audio (Veo 3.1, Grok Imagine, Sora 2, Kling) vs video-only capabilities (ArtificialAnlys).

- Grok Imagine momentum: Multiple signals point to Grok Imagine’s strong standing in public arenas, including Elon claiming “rank 1” (elonmusk) and Arena reporting Grok-Imagine-Video-720p taking #1 on image-to-video, “5× cheaper” than Veo 3.1 per their framing (arena).

- Kling 3.0 shipping iteration: Kling 3.0 is highlighted for custom multishot control (prompt per-shot for up to ~15s) and improved detail/character refs/native audio (jerrod_lew).

- Qwen image editing tooling: A Hugging Face app demonstrates multi-angle “3D lighting control” for image editing with discrete horizontal/elevation positions via an adapter approach (prithivMLmods).

Research notes: reasoning & generalization, continual learning, and robotics/world models

-

How LLMs reason (PhD thesis): Laura Ruis published her thesis on whether LLMs generalize beyond training data; her stated takeaway: LLMs can generalize in “interesting ways,” suggesting genuine reasoning rather than pure memorization (LauraRuis).

-

Continual learning as a theme: Databricks’ MemAlign frames agent memory as continual-learning machinery for building better LLM judges from human ratings, integrated into Databricks + MLflow (matei_zaharia). François Chollet argued AGI is more likely from discovering meta-rules enabling systems to adapt their own architecture than from scaling frozen knowledge stores (fchollet).

-

Robotics: from sim locomotion to “world action models”:

- RPL locomotion: a unified policy for robust perceptive locomotion across terrains, multi-direction, and payload disturbances—trained in sim and validated long-horizon in real world (Yuanhang__Zhang).

- DreamZero (NVIDIA): Jim Fan describes “World Action Models” built on a world-model backbone enabling zero-shot open-world prompting for new verbs/nouns/environments, emphasizing diversity-over-repetition data recipes and cross-embodiment transfer via pixels; claims open-source release and demos (DrJimFan, DrJimFan).

-

World-model “playable” content: Waypoint-1.1 claims a step to local, real-time world models that are coherent/controllable/playable; model is Apache 2.0 open-source per the team (overworld_ai, lcastricato).

Top tweets (by engagement)

- Sam Altman on Anthropic’s Super Bowl ads + OpenAI ads principles + Codex adoption (@sama)

- Karpathy retrospective: “vibe coding” → “agentic engineering” (@karpathy)

- Gemini usage at scale: 10B tokens/min + 750M MAU (OfficialLoganK)

- VS Code ships agent sessions + parallel subagents + Claude/Codex support (@code)

- GitHub: Claude + Codex available via Copilot Pro+/Enterprise (@github)

- METR: GPT-5.2 “high” ~6.6h time horizon on software tasks (@METR_Evals)

- Arena: Grok-Imagine-Video takes #1 image-to-video leaderboard (@arena)

- Sundar: Alphabet FY results; Gemini 3 adoption fastest (@sundarpichai)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Qwen3-Coder-Next Model Release

-

Qwen/Qwen3-Coder-Next · Hugging Face (Activity: 1161): Qwen3-Coder-Next is a language model designed for coding tasks, featuring

3B activated parametersout of80B total, achieving performance comparable to models with10-20xmore active parameters. It supports256kcontext length, advanced agentic capabilities, and long-horizon reasoning, making it suitable for integration with various IDEs. The architecture includes48 layers, gated attention mechanisms, and a mixture of experts. Deployment can be done using SGLang or vLLM, requiring specific versions for optimal performance. More details are available in the original article. One commenter expressed skepticism about the model’s performance, questioning if a3B activated parametermodel can truly match the quality of larger models like Sonnet 4.5, indicating a need for further validation of these claims.- danielhanchen discusses the release of dynamic Unsloth GGUFs for Qwen3-Coder-Next, highlighting upcoming releases of Fp8-Dynamic and MXFP4 MoE GGUFs. These formats are designed to optimize model performance and efficiency, particularly in local environments. A guide is also provided for using Claude Code / Codex locally with Qwen3-Coder-Next, which could be beneficial for developers looking to integrate these models into their workflows.

- Ok_Knowledge_8259 raises skepticism about the claim that a 3 billion activated parameter model can match the quality of larger models like Sonnet 4.5. This comment reflects a common concern in the AI community about the trade-off between model size and performance, suggesting that further empirical validation is needed to substantiate such claims.

- Septerium notes that while the original Qwen3 Next performed well in benchmarks, the user experience was lacking. This highlights a critical issue in AI model deployment where high benchmark scores do not always translate to practical usability, indicating a need for improvements in user interface and interaction design.

-

Qwen3-Coder-Next is out now! (Activity: 497): The image announces the release of Qwen3-Coder-Next, an 80 billion parameter Mixture of Experts (MoE) model with 3 billion active parameters, designed for efficient coding tasks and local deployment. It emphasizes the model’s capability to handle

256Kcontext lengths and its fast inference speed, optimized for long-horizon reasoning and complex tool use. The model requires46GBof RAM/VRAM for operation, making it suitable for high-performance environments. The image includes a performance graph comparing Qwen3-Coder-Next to other models, showcasing its efficiency and advanced capabilities. A comment questions the model’s performance level, comparing it to ‘sonnet 4.5’, indicating skepticism or curiosity about its capabilities. Another comment inquires about the feasibility of running the model with64GBof RAM, suggesting interest in its hardware requirements. Additionally, there is a remark on the absence of a comparison with ‘Devstral 2’, hinting at a potential gap in the performance evaluation.- A user inquired about the model’s performance, questioning if it truly reaches ‘sonnet 4.5 level’ and whether it includes ‘agentic mode’, or if the model is simply optimized for specific tests. This suggests a curiosity about the model’s real-world applicability versus benchmark performance.

- Another user shared a quick performance test using LM Studio, reporting a processing speed of ‘6 tokens/sec’ on a setup with an RTX 4070 and 14700k CPU with 80GB DDR4 3200 RAM. They also noted a comparison with ‘llama.cpp’ achieving ‘21.1 tokens/sec’, indicating a significant difference in performance metrics between the two setups.

- A technical question was raised about the feasibility of running the model with ‘64GB of RAM’ and no VRAM, highlighting concerns about hardware requirements and accessibility for users without high-end GPUs.

2. ACE-Step 1.5 Audio Model Launch

-

ACE-Step-1.5 has just been released. It’s an MIT-licensed open source audio generative model with performance close to commercial platforms like Suno (Activity: 744): ACE-Step-1.5 is an open-source audio generative model released under the MIT license, designed to rival commercial platforms like Suno. It supports LoRAs, offers multiple models for various needs, and includes features like cover and repainting. The model is integrated with Comfy and available for demonstration on HuggingFace. This release marks a significant advancement in open-source audio generation, closely matching the capabilities of leading proprietary solutions. A notable comment highlights the potential impact of a recently leaked

300TBdataset, suggesting future models might leverage this data for training. Another comment encourages support for the official model research organization, ACE Studio.- A user compared the performance of ACE-Step-1.5 with Suno V5 using the same prompt, highlighting that while ACE-Step-1.5 is impressive for an open-source model, it does not yet match the quality of Suno V5. The user specifically noted that the cover feature of ACE-Step-1.5 is currently not very useful, indicating room for improvement in this area. They provided audio links for direct comparison: Suno V5 and ACE 1.5.

- Another user pointed out that the demo prompts for ACE-Step-1.5 seem overly detailed, yet the model appears to ignore most of the instructions. This suggests potential issues with the model’s ability to interpret and execute complex prompts accurately, which could be a limitation in its current implementation.

-

The open-source version of Suno is finally here: ACE-Step 1.5 (Activity: 456): ACE-Step 1.5 is an open-source music generation model that outperforms Suno on standard evaluation metrics. It can generate a complete song in approximately

2 secondson an A100 GPU and operates locally on a typical PC with around4GB VRAM, achieving under10 secondson an RTX 3090. The model supports LoRA for training custom styles with minimal data and is released under the MIT license, allowing free commercial use. The dataset includes fully authorized and synthetic data. The GitHub repository provides access to weights, training code, LoRA code, and a paper. Commenters noted the model’s significant improvements but criticized the presentation of evaluation graphs as lacking clarity. There is also a discussion on its instruction following and coherency, which are seen as inferior to Suno v3, though the model is praised for its creativity and potential as a foundational tool. Speculation about a forthcoming version 2 is also mentioned.- TheRealMasonMac highlights that ACE-Step 1.5 shows a significant improvement over its predecessor, though it still lags behind Suno v3 in terms of instruction following and coherency. However, the audio quality is noted to be good, and the model is described as creative and different from Suno, suggesting it could serve as a solid foundation for future development.

- Different_Fix_2217 provides examples of audio generated by ACE-Step 1.5, indicating that the model performs well with long, detailed prompts and can handle negative prompts. This suggests a level of flexibility and adaptability in the model’s design, which could be beneficial for users looking to experiment with different input styles.

3. Voxtral-Mini-4B Speech-Transcription Model

-

mistralai/Voxtral-Mini-4B-Realtime-2602 · Hugging Face (Activity: 266): The Voxtral Mini 4B Realtime 2602 is a cutting-edge, open-source, multilingual speech-transcription model that achieves near offline accuracy with a delay of

<500ms. It supports13 languagesand is built with a natively streaming architecture and a custom causal audio encoder, allowing configurable transcription delays from240ms to 2.4s. At a480msdelay, it matches the performance of leading offline models and realtime APIs. The model is optimized for on-device deployment with minimal hardware requirements, achieving a throughput of over12.5 tokens/second. Commenters appreciate the open-source contribution, especially the inclusion of the realtime processing part to vllm. However, there is disappointment over the lack of turn detection features, which are present in other models like Moshi’s STT, necessitating additional methods for turn detection.- The Voxtral Realtime model is designed for live transcription with configurable latency down to sub-200ms, which is crucial for applications like voice agents and real-time processing. However, it lacks speaker diarization, which is available in the batch transcription model, Voxtral Mini Transcribe V2. This feature is particularly useful for distinguishing between different speakers in a conversation, but its absence in the open model may limit its utility for some users.

- Mistral has contributed to the open-source community by integrating the realtime processing component into vLLM, enhancing the infrastructure for live transcription applications. Despite this, the model does not include turn detection, a feature present in Moshi’s STT, which requires users to implement alternative methods such as punctuation, timing, or third-party text-based solutions for turn detection.

- Context biasing, a feature that allows the model to prioritize certain words or phrases based on context, is currently only supported through Mistral’s direct API. This feature is not available in the vLLM implementation for either the new Voxtral-Mini-4B-Realtime-2602 model or the previous 3B model, limiting its accessibility for developers using the open-source version.

-

Some hard lessons learned building a private H100 cluster (Why PCIe servers failed us for training) (Activity: 530): The post discusses the challenges faced when building a private H100 cluster for training large models (70B+ parameters) and highlights why PCIe servers were inadequate. The author notes that the lack of NVLink severely limits data transfer rates during All-Reduce operations, with PCIe capping at

~128 GB/scompared to NVLink’s~900 GB/s, leading to GPU idling. Additionally, storage checkpoints for large models can reach~2.5TB, requiring rapid disk writes to prevent GPU stalls, which standard NFS filers cannot handle, necessitating parallel filesystems or local NVMe RAID. The author also mentions the complexities of using RoCEv2 over Ethernet instead of InfiniBand, which requires careful monitoring of pause frames to avoid cluster stalls. Commenters emphasize the importance of fast NVMe over Fabrics Parallel FS for training builds to prevent GPU idling and suggest that InfiniBand should be mandatory for compute, while RoCEv2 is preferable for storage. The surprise at storage write speed issues is also noted.- A storage engineer emphasizes the importance of a fast NVMe over Fabrics Parallel File System (FS) as a critical requirement for a training build, highlighting that without adequate storage to feed GPUs, there will be significant idle time. They also recommend using Infiniband for compute, noting that RoCEv2 is often preferable for storage. This comment underscores the often-overlooked aspect of shared storage in training workflows.

- A user expresses surprise at the storage write speed being a bottleneck, indicating that this is an unexpected issue for many. This highlights a common misconception in building training clusters, where the focus is often on compute power rather than the supporting infrastructure like storage, which can become a critical pinch point.

- Another user proposes a theoretical solution involving milli-second distributed RAM with automatic hardware mapping of page faults, suggesting that such an innovation could simplify cluster management significantly. This comment reflects on the broader issue of addressing the right problems in system architecture.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo, /r/aivideo

1. Anthropic vs OpenAI Ad-Free Debate

-

Sam’s response to Anthropic remaining ad-free (Activity: 1536): Sam Altman responded to Anthropic’s decision to remain ad-free, highlighting a competitive dynamic in the AI space. The discussion references a Claude Ad Campaign and suggests that more Texans use ChatGPT for free than the total number of Claude users in the US, indicating a significant user base disparity. This reflects ongoing competition between AI companies, reminiscent of historical tech rivalries like Microsoft and Apple. Commenters draw parallels between the current AI competition and past tech rivalries, suggesting a public display of competition while potentially collaborating privately.

- BuildwithVignesh highlights the effectiveness of the Claude Ad Campaign, suggesting that it has successfully captured attention despite the competitive landscape. The campaign’s impact is implied to be significant, although specific metrics or outcomes are not detailed in the comment.

- LimiDrain provides a comparative analysis, stating that ‘more Texans use ChatGPT for free than total people use Claude in the US’. This suggests a significant disparity in user base size between ChatGPT and Claude, indicating ChatGPT’s broader reach and adoption in the market.

- Eyelbee references a past statement by Sam, noting that he found AI ads disturbing a year ago. This comment suggests a potential inconsistency or evolution in Sam’s stance on AI advertising, especially in light of Anthropic’s decision to remain ad-free, which could be seen as a critique of ad-based models.

-

Anthropic declared a plan for Claude to remain ad-free (Activity: 1555): Anthropic has announced a commitment to keep its AI assistant, Claude, ad-free, emphasizing its role as a tool for work and deep thinking. This decision is highlighted in a blog post titled ‘Claude is a space to think,’ which underscores the company’s dedication to maintaining a distraction-free environment for users. The announcement contrasts with other AI models that may incorporate ads, positioning Claude as a premium, focused tool for productivity. Commenters note that while Claude is ad-free, its free tier is highly limited, making it less accessible without payment. This has sparked debate about the practicality of its ad-free claim, as users may still need to pay for effective use, contrasting with other AI models that offer more generous free usage.

- ostroia points out that while Claude is ad-free, it has strict limitations on its free tier, making it mostly unusable for anything beyond quick questions. This raises questions about the practicality of boasting about being ad-free when the product requires payment to be truly usable.

- seraphius highlights the potential negative impact of ads on platforms, noting that ads can shift the focus of executives towards ‘advertiser friendliness,’ which can weaken the platform’s integrity. This is compared to the situation on YouTube, where ad-driven decisions have significantly influenced content and platform policies.

-

Sam Altman’s response to the Anthropic Super Bowl ad. He said, “More Texans use ChatGPT for free than total people use Claude in the US” (Activity: 1394): The image captures Sam Altman’s critique of Anthropic’s Super Bowl ad, where he claims that more Texans use ChatGPT for free than the total number of people using Claude in the US. Altman accuses Anthropic of being dishonest in their advertising and contrasts OpenAI’s commitment to free access with Anthropic’s approach, which he describes as controlling and expensive. He also expresses confidence in OpenAI’s Codex and emphasizes the importance of making AI accessible to developers. Commenters debate the hypocrisy of Altman’s statement, noting that OpenAI also imposes restrictions on AI usage, as seen with their ‘nanny bot’ in version 5.2. There is also skepticism about Anthropic’s alleged blocking of OpenAI from using Claude for coding.

- AuspiciousApple highlights the competitive tension between OpenAI and Anthropic, noting that Sam Altman’s detailed response to Anthropic’s ad suggests a deeper concern about competition. This reflects the broader industry dynamics where major AI companies are closely monitoring each other’s moves, indicating a highly competitive landscape.

- owlbehome criticizes OpenAI’s approach to AI control, pointing out the perceived hypocrisy in Sam Altman’s statement about Anthropic’s control over AI. The comment references OpenAI’s own restrictions in version 5.2, suggesting that both companies impose significant limitations on AI usage, which is a common critique in the AI community regarding the balance between safety and usability.

- RentedTuxedo discusses the importance of competition in the AI industry, arguing that more players in the market benefit consumers. The comment criticizes the tribalism among users who show strong allegiance to specific companies, emphasizing that consumer choice should be based on performance rather than brand loyalty. This reflects a broader sentiment that healthy competition drives innovation and better products.

-

Anthropic mocks OpenAI’s ChatGPT ad plans and pledges ad-free Claude (Activity: 813): Anthropic has announced that its AI model, Claude, will remain ad-free, contrasting with OpenAI’s plans to introduce ads in ChatGPT. This decision was highlighted in a satirical ad mocking OpenAI’s approach, emphasizing Anthropic’s commitment to an ad-free experience. The move is seen as a strategic differentiation in the competitive AI landscape, where monetization strategies are evolving. The Verge provides further details on this development. Commenters express skepticism about Anthropic’s ad-free pledge, suggesting financial pressures may eventually lead to ads, similar to trends in streaming services.

-

Anthropic laughs at OpenAI (Activity: 485): The Reddit post humorously highlights a competitive jab from Anthropic towards OpenAI, suggesting a rivalry between the two companies in the large language model (LLM) space. The post does not provide specific technical details or benchmarks but implies a competitive atmosphere in the AI industry, reminiscent of past corporate rivalries such as Samsung vs. Apple. The external link is unrelated to the main post, focusing instead on fitness advice for achieving a ‘six-pack.’ The comments reflect a mix of amusement and skepticism, with users drawing parallels to past corporate rivalries and expressing hope that the situation does not backfire on Anthropic, similar to how Samsung’s past marketing strategies did.

- ClankerCore highlights the technical execution of the AI in the ad, noting the use of a human model with AI overlays. The comment emphasizes the subtle adjustments made to the AI’s behavior, particularly in eye movement, which adds a layer of realism to the portrayal. This suggests a sophisticated blend of human and AI elements to enhance the advertisement’s impact.

- The comment by ClankerCore also critiques the performance of Anthropic’s Claude, pointing out its inefficiency in handling simple arithmetic operations like ‘2+2’. The user mentions that such operations consume a significant portion of the token limit for plus users, indicating potential limitations in Claude’s design or token management system.

- ClankerCore’s analysis suggests that while the marketing execution is impressive, the underlying AI technology, specifically Claude, may not be as efficient or user-friendly for non-coding tasks. This highlights a potential gap between the marketing portrayal and the actual performance of the AI product.

-

Sam Altman response for Anthropic being ad-free (Activity: 1556): Sam Altman responded to a tweet about Anthropic being ad-free, which seems to be a reaction to a recent Claude ad campaign. The tweet and subsequent comments suggest a competitive tension between AI companies, with Altman emphasizing that they are not ‘stupid’ in their strategic decisions. This exchange highlights the ongoing rivalry in the AI space, particularly between OpenAI and Anthropic. Commenters noted the competitive nature of the AI industry, comparing it to the rivalry between brands like Coke and Pepsi. Some expressed a desire for more lighthearted exchanges between companies, while others critiqued Altman’s defensive tone.

-

Official: Anthropic declared a plan for Claude to remain ad-free (Activity: 2916): Anthropic has officially announced that their AI, Claude, will remain ad-free, as stated in a tweet. This decision aligns with their vision of Claude being a ‘space to think’ and a helpful assistant for work and deep thinking, suggesting that advertising would conflict with these goals. The announcement is part of a broader strategy to maintain the integrity and focus of their AI services, as detailed in their full blog post. Some users express skepticism about the long-term commitment to this ad-free promise, suggesting that corporate decisions can change over time. Others humorously reference Sam Altman with a play on words, indicating a mix of hope and doubt about the future of this policy.

-

Anthropic is airing this ads mocking ChatGPT ads during the Super Bowl (Activity: 1599): Anthropic is reportedly airing ads during the Super Bowl that mock ChatGPT ads, although these ads are not yet promoting their own AI model, Claude. This strategy is reminiscent of Samsung’s past marketing tactics where they mocked Apple for not including a charger, only to follow suit later. The ads are seen as a strategic move ahead of Anthropic’s potential IPO and business pivot. Commenters suggest that the ad campaign might backfire or become outdated (’aged like milk’) once Anthropic undergoes its IPO and potentially shifts its business strategy.

2. Kling 3.0 and Omni 3.0 Launch

-

Kling 3.0 example from the official blog post (Activity: 679): Kling 3.0 showcases advanced video synthesis capabilities, notably maintaining subject consistency across different camera angles, which is a significant technical achievement. However, the audio quality is notably poor, described as sounding like it was recorded with a ‘sheet of aluminum covering the microphone,’ a common issue in video models. The visual quality, particularly in the final scene, is praised for its artistic merit, reminiscent of ‘late 90s Asian art house movies’ with its color grading and transitions. Commenters are impressed by the visual consistency and artistic quality of Kling 3.0, though they criticize the audio quality. The ability to maintain subject consistency across angles is highlighted as a technical breakthrough.

- The ability of Kling 3.0 to switch between different camera angles while maintaining subject consistency is a significant technical achievement. This feature is particularly challenging in video models, as it requires advanced understanding of spatial and temporal coherence to ensure that the subject remains believable across different perspectives.

- A notable issue with Kling 3.0 is the audio quality, which some users describe as sounding muffled, akin to being recorded with a barrier over the microphone. This is a common problem in video models, indicating that while visual realism is advancing, audio processing still lags behind and requires further development to match the visual fidelity.

- The visual quality of Kling 3.0 has been praised for its artistic merit, particularly in scenes that evoke a nostalgic, dream-like feel through color grading and highlight transitions. This suggests that the model is not only technically proficient but also capable of producing aesthetically pleasing outputs that resonate on an emotional level, similar to late 90s art house films.

-

Kling 3 is insane - Way of Kings Trailer (Activity: 1464): The post discusses the creation of a trailer for ‘Way of Kings’ using Kling 3.0, an AI tool. The creator, known as PJ Ace, shared a breakdown of the process on their X account. The trailer features a scene where a character’s appearance changes dramatically upon being sliced with a blade, showcasing the AI’s capability to render complex visual effects. Although some elements were missing, the AI’s performance was noted as impressive for its ability to recognize and replicate scenes accurately. Commenters expressed amazement at the AI’s ability to render recognizable scenes, with one noting the impressive transformation effects despite some missing elements. The discussion highlights the potential of AI in creative visual media.

-

Kling 3 is insane - Way of Kings Trailer (Activity: 1470): The post discusses the creation of a trailer for ‘Way of Kings’ using Kling 3.0, an AI tool. The creator, known as PJ Ace, who is also recognized for work on a Zelda trailer, shared a breakdown of the process on their X account. The trailer features a scene where a character’s appearance changes dramatically upon being sliced with a blade, showcasing the AI’s capability to render complex visual transformations. Although some elements were missing, the AI’s performance was noted as impressive by viewers. Commenters expressed amazement at the AI’s ability to create recognizable scenes and perform complex visual effects, despite some missing elements. The discussion highlights the potential of AI in creative media production.

-

Been waiting Kling 3 for weeks. Today you can finally see why it’s been worth the wait. (Activity: 19): Kling 3.0 introduces significant updates with features like

3-15s multi-shot sequences,native audio with multiple characters, and the ability toupload/record video characters as referenceensuring consistent voices. This release aims to enhance the user experience in creating AI-driven video content, offering more dynamic and realistic outputs. Users can explore these features on the Higgsfield AI platform. The community response highlights enthusiasm for the realistic effects, such as the ‘shaky cam’, which adds to the visual authenticity of the generated content. There is also a call to action for users to engage with the community by sharing their AI videos and participating in discussions on Discord.- A user expressed frustration over the lack of clear information distinguishing the differences between the ‘Omni’ and ‘3’ models, highlighting a common issue in tech marketing where specifications and improvements are not clearly communicated. This can lead to confusion among users trying to understand the value proposition of new releases.

-

KLING 3.0 is here: testing extensively on Higgsfield (unlimited access) – full observation with best use cases on AI video generation model (Activity: 12): KLING 3.0 has been released, focusing on extensive testing on the Higgsfield platform, which offers unlimited access for AI video generation. The update highlights full observation capabilities and optimal use cases for the model, potentially enhancing video generation tasks. However, the post lacks detailed technical specifications or benchmarks of the model’s performance improvements over previous versions. The comments reflect skepticism and frustration, with users perceiving the post as an advertisement for Higgsfield rather than a substantive technical update. There is also confusion about the relevance of the post to VEO3, indicating a possible disconnect between the announcement and the community’s interests.

3. GPT 5.2 and ARC-AGI Benchmarks

-

OpenAI seems to have subjected GPT 5.2 to some pretty crazy nerfing. (Activity: 1100): The image presents a graph depicting the performance of “GPT-5-Thinking” on IQ tests over time, with a notable decline in early 2026. This suggests that OpenAI may have reduced the capabilities of GPT-5.2, possibly as part of a strategic adjustment or due to resource constraints during training. The graph annotations indicate transitions between different versions of the AI, hinting at changes in its capabilities or architecture. The comments suggest that users have noticed a decrease in performance, possibly due to resource allocation for training or in anticipation of new releases like GPT 5.3 or DeepSeek v4. Commenters speculate that the perceived decline in performance might be due to resource limitations during training or strategic adjustments by OpenAI. Some users express dissatisfaction with the current performance compared to competitors like Gemini, while others anticipate improvements in future versions.

- nivvis highlights a common issue during model training phases, where companies like OpenAI and Anthropic face GPU/TPU limitations. This necessitates reallocating resources from inference to training, which can temporarily degrade performance. This is not unique to OpenAI; Anthropic’s Opus has also been affected, likely in preparation for upcoming releases like DeepSeek v4.

- xirzon suggests that significant performance drops in technical services, such as those experienced with GPT 5.2, are often due to partial or total service outages. This implies that the observed ‘nerfing’ might not be a deliberate downgrade but rather a temporary issue related to service availability.

- ThadeousCheeks notes a similar decline in Google’s performance, particularly in tasks like cleaning up slide decks. This suggests a broader trend of performance issues across major AI services, possibly linked to resource reallocation or other operational challenges.

-

New SOTA achieved on ARC-AGI (Activity: 622): The image illustrates a new state-of-the-art (SOTA) achievement on the ARC-AGI benchmark by a model based on GPT-5.2. This model, developed by Johan Land, achieved a score of

72.9%with a cost of$38.9per task, marking a significant improvement from the previous score of54.2%. The ARC-AGI benchmark, which was introduced less than a year ago, has seen rapid advancements, with the initial top score being only4%. The model employs a bespoke refinement approach, integrating multiple methodologies to enhance performance. Commenters note the rapid progress in ARC-AGI benchmark scores, expressing surprise at reaching over70%so quickly, though some highlight the high cost per task as a concern. There is anticipation for the next version, ARC-AGI-3, expected to launch in March 2026, as ARC-AGI-2 approaches saturation.- The ARC-AGI benchmark, which was introduced less than a year ago, has seen rapid progress with the latest state-of-the-art (SOTA) result reaching 72.9%. This is a significant improvement from the initial release score of 4% and the previous best of 54.2%. The benchmark’s quick evolution highlights the fast-paced advancements in AI capabilities.

- The cost of achieving high performance on the ARC-AGI benchmark is a point of discussion, with current solutions costing around $40 per task. There is interest in reducing this cost to $1 per task while maintaining or improving the performance to over 90%, which would represent a significant efficiency improvement.

- The ARC-AGI benchmark uses an exponential scale on its x-axis, indicating that moving towards the top right of the graph typically involves increasing computational resources to achieve better results. The ideal position is the top left, which would signify high performance with minimal compute, emphasizing efficiency over brute force.

-

Does anyone else have the same experience with 5.2? (Activity: 696): The image is a meme that humorously critiques the handling of custom instructions by GPT version 5.2, particularly in its ‘Thinking’ mode. The meme suggests that the model may not effectively process or retain user-provided custom instructions, as depicted by the character’s surprise when the instructions catch fire. This reflects user frustrations with the model’s limitations in handling specific tasks or instructions, possibly due to efforts to prevent jailbreaks or misuse. Commenters express dissatisfaction with GPT 5.2’s handling of custom instructions and memory, noting that explicit directions are often required for the model to access certain information, which they find cumbersome.

- NoWheel9556 highlights that the update to version 5.2 seems to have been aimed at preventing jailbreaks, which may have inadvertently affected other functionalities. This suggests a trade-off between security measures and user experience, potentially impacting how the model processes certain tasks.

- FilthyCasualTrader points out a specific usability issue in version 5.2, where users must explicitly direct the model to look at certain data, such as ‘attachments in Projects folder or entries in Saved Memories’. This indicates a regression in intuitive data handling, requiring more explicit instructions from users.

- MangoBingshuu mentions a problem with the Gemini pro model, where it tends to ignore instructions after a few prompts. This suggests a potential issue with instruction retention or prompt management, which could affect the model’s reliability in maintaining context over extended interactions.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5.1

1. Cutting-Edge Models, Coders, and Routers

-

Qwen3 Coder Next Codes Circles Around GPT Giants: Qwen3-Coder-Next emerged as a standout local coding model, with users on Unsloth, Hugging Face, and LM Studio reporting it outperforming GPT‑OSS 120B while running efficiently from GGUF quantizations like MXFP4_MOE and even fixing long‑standing

glm flashbugs; Unsloth hosts the main GGUF release at unsloth/Qwen3-Coder-Next-GGUF, and a Reddit thread documents an update that “now produces much better code” for the refreshed GGUFs at this post.- Engineers are pushing VRAM optimization hard by selectively offloading FFN layers to CPU via

-otflags (and asking for a “significance chart” to rank layers by importance) while others confirm smooth vLLM inference on an RTX 5080, making Qwen3-Coder-Next a practical workhorse across Unsloth, Hugging Face, and LM Studio setups.

- Engineers are pushing VRAM optimization hard by selectively offloading FFN layers to CPU via

-

Max Router Mines Millions of Votes to Pick Your Model: LMArena announced Max, an intelligent router trained on 5+ million community votes that automatically dispatches each prompt to the “most capable model” given latency and cost, detailed in the blog post “Introducing Max” and an explainer video on YouTube.

- Users quickly started poking at Max’s behavior, noticing it sometimes claims Claude Sonnet 3.5 is backing responses while actually routing to Grok 4, prompting jokes like “Max = sonnet 5 in disguise” and raising questions about router transparency and evaluation methodology.

-

Kimi K2.5 Sneaks into Cline and VPS Racks: Kimi k2.5 went live on the developer‑oriented IDE agent Cline, announced in a Cline tweet and a Discord note promising a limited free access window for experimentation at cline.bot.

- Over on the Moonshot and Unsloth servers, engineers confirmed Kimi K2.5 can run as Kimi for Coding and discussed running it from VPS/datacenter IPs after Kimi itself green‑lit such use in a shared transcript, positioning it as a more permissive alternative to Claude for remote coding agents and OpenClaw‑style setups.

2. New Benchmarks, Datasets, and Kernel Contests

-

Judgment Day Benchmark Puts AI Ethics on Trial: AIM Intelligence and Korea AISI, with collaborators including Google DeepMind, Microsoft, and several universities, announced the Judgment Day benchmark and Judgment Day Challenge for stress‑testing AI decision‑making, with details and submission portal at aim-intelligence.com/judgement-day.

- They are soliciting adversarial attack scenarios around decisions AI must/never make, paying $50 per accepted red‑team submission and promising co‑authorship in the benchmark paper, with a Feb 10, 2026 scenario deadline and a $10,000 prize pool challenge kicking off March 21, 2026 for multimodal (text/audio/vision) jailbreaks.

-

Platinum-CoTan Spins Triple-Stack Reasoning Data: A Hugging Face user released Platinum-CoTan, a deep reasoning dataset generated through a triple‑stack pipeline Phi‑4 → DeepSeek‑R1 (70B) → Qwen‑2.5, focusing on Systems, FinTech, and Cloud domains and hosted at BlackSnowDot/Platinum-CoTan.

- The community pitched it as “high‑value technical reasoning” training material, complementary to other open datasets, for models that need long‑horizon, domain‑specific chain‑of‑thought in enterprise‑y systems and finance scenarios rather than generic math puzzles.

-

FlashInfer Contest Drops Full Kernel Workloads: The FlashInfer AI Kernel Generation Contest dataset landed on Hugging Face at flashinfer-ai/mlsys26-contest, bundling complete kernel definitions and workloads for ML systems researchers to benchmark AI‑generated kernels.

- GPU MODE’s #flashinfer channel confirmed the repo now includes all kernels and target shapes so contestants can train/eval model‑written CUDA/Triton code offline, while Modal credits and team‑formation logistics dominated meta‑discussion about running those workloads at scale.

3. Training & Inference Tooling: GPUs, Quantization, and Caches

... [Content truncated due to size limits]